Uncovering AI's Mental Model


A few weeks ago I wrote about how Anthropic uncovered where different ideas and facts are stored inside a large language model. Last week they released the results of follow-up research in which they show how an AI actually reasons, along with evidence that, rather than just writing text one token at a time, the AI actually plans ahead.

A few highlights from their research:

Concepts like “car” or “quantum mechanics” are stored inside an AI in a multi-lingual form

Claude 3.5, for example, understands many human languages: English, German, French, Chinese… even Swahili. But when an AI learns about “cars” in different languages, does it hold a different concept of what a “car” is for each language? The latest research by Anthropic suggests the answer is no. The AI stores “car” and related facts as an abstract, language-independent feature, which is then combined with a language-specific feature when producing text.
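To make the idea of "one shared concept plus a language feature" a bit more concrete, here is a tiny toy sketch. Everything in it is an assumption for illustration: the feature vectors, the vocabulary and the `render` function are made up, and this is not how Anthropic inspected Claude or how Claude stores anything internally.

```python
# Toy illustration only: hand-made features, not real model activations.
CONCEPTS = {"car": (1.0, 0.0), "house": (0.0, 1.0)}
LANGUAGES = {"en": (1.0, 0.0, 0.0), "de": (0.0, 1.0, 0.0), "fr": (0.0, 0.0, 1.0)}

# Each surface word = shared concept features followed by language features.
VOCAB = {
    "car": CONCEPTS["car"] + LANGUAGES["en"],
    "Auto": CONCEPTS["car"] + LANGUAGES["de"],
    "voiture": CONCEPTS["car"] + LANGUAGES["fr"],
    "house": CONCEPTS["house"] + LANGUAGES["en"],
    "Haus": CONCEPTS["house"] + LANGUAGES["de"],
    "maison": CONCEPTS["house"] + LANGUAGES["fr"],
}


def dot(u, v):
    """Plain dot product of two equal-length tuples."""
    return sum(x * y for x, y in zip(u, v))


def render(concept, language):
    """Combine one abstract concept with one language and pick the best-matching word."""
    query = CONCEPTS[concept] + LANGUAGES[language]
    return max(VOCAB, key=lambda word: dot(VOCAB[word], query))
```

The point of the toy is that "car" only exists once in `CONCEPTS`; the language is a separate ingredient that is mixed in at the very end, so `render("car", "de")` and `render("car", "fr")` share the same concept and differ only in the language part.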

LLMs plan ahead when writing

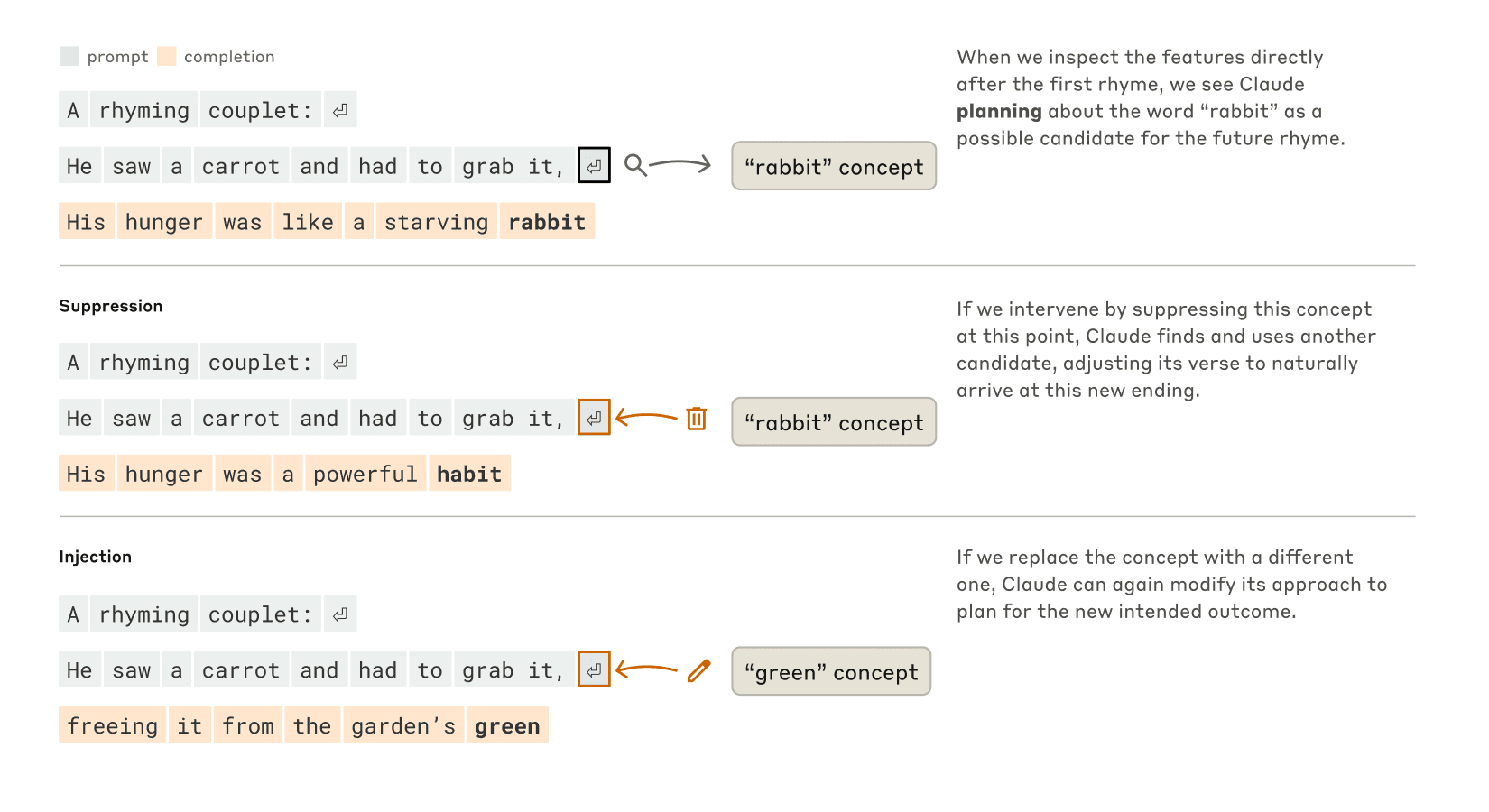

Models like Claude are trained to produce one token at a time, which raises an important question: does the AI reason and plan ahead? By probing the “brain” of the AI while it is writing poetry, the researchers found that yes, the AI plans its tokens well ahead. As an example, they took the first part of a poem:

He saw a carrot and had to grab it

They then examined what the AI was thinking while generating the next line:

He saw a carrot and had to grab it

His hunger was like a starving rabbit

Before writing the first token of the second line, the AI had already internally selected “rabbit” as a good candidate to end the rhyme with. Next, the researchers artificially suppressed “rabbit” and found that the AI went for the next best option, “habit”, and completed the line to match that instead:

His hunger was a powerful habit
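The "pick the rhyme word first, then write toward it" behaviour can be mimicked with a very small toy. This is only a sketch of the idea: the scores, the `RHYME_CANDIDATES` table and both function names are invented here, and the real experiment suppressed internal features of the model rather than entries in a dictionary.

```python
# Toy sketch: plan the last word first, then write a line that ends with it.
# Scores and line templates are made up for illustration.
RHYME_CANDIDATES = {"rabbit": 0.6, "habit": 0.3}

LINES_ENDING_WITH = {
    "rabbit": "His hunger was like a starving rabbit",
    "habit": "His hunger was a powerful habit",
}


def plan_rhyme(candidates, suppressed=()):
    """Return the highest-scoring rhyme word that has not been suppressed."""
    allowed = {word: score for word, score in candidates.items()
               if word not in suppressed}
    return max(allowed, key=allowed.get)


def write_second_line(suppressed=()):
    """Choose the target word before writing, then produce a line ending in it."""
    target = plan_rhyme(RHYME_CANDIDATES, suppressed)
    return LINES_ENDING_WITH[target]
```

Calling `write_second_line()` ends on "rabbit", while `write_second_line(suppressed=("rabbit",))` falls back to the "habit" line, mirroring the intervention described above.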

AI does mental math differently from how it claims to

While AI models have always been notoriously bad at arithmetic and math, they are slowly improving. For simple mental arithmetic such as calculating 36+59, AI is mostly correct these days, and one open question was whether the AI simply memorized the answer from training, actually calculates it internally, or does something else entirely.

From a few experiments, Anthropic researchers found that the AI combines two internal lines of reasoning: 36+59 should be roughly 90, and 6+9 should end with a “5”. Hence the final answer: 95. Surprisingly, when asked to explain how it came up with the answer, the AI does not seem to be aware of its internal process and instead describes a simple algorithm.
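To get a feel for how a rough estimate and an exact last digit can pin down the answer together, here is a small toy in Python. It is my own simplification, not the circuit the researchers found: the way `rough_window` builds its estimate (rounding only the second number to the nearest ten) is an assumption chosen so that the window is guaranteed to contain the true sum.

```python
def rough_window(a, b):
    """Coarse path: ten consecutive candidates around a rough estimate.

    Assumption: the estimate rounds b to the nearest ten (halves round up),
    so the true sum is at most 5 below or 4 above the estimate.
    """
    estimate = a + ((b + 5) // 10) * 10
    return range(estimate - 5, estimate + 5)


def last_digit(a, b):
    """Precise path: only looks at the ones digits of both numbers."""
    return (a % 10 + b % 10) % 10


def add_like_the_toy(a, b):
    """Combine both paths: the one candidate in the window with the right last digit."""
    digit = last_digit(a, b)
    matches = [n for n in rough_window(a, b) if n % 10 == digit]
    return matches[0]
```

For 36+59 the coarse path yields the candidates 91 to 100, the precise path says the answer ends in 5, and the only number satisfying both is 95. Neither path "carries the 1" the way the schoolbook method does, which is the flavour of the mismatch between what the AI does and what it says it does.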

My thoughts

Although I understand the building blocks of AI (transformers, activations, tokenization…), how it actually understands my prompts was always an uncomfortable black box for me. It is interesting to see that we’re starting to dissect an AI’s brain in a way very similar to neuroscience experiments, where we attach electrodes to an animal’s brain and measure electrical responses. Except in this case we can do it much faster and in a much more controlled way, because we can monitor any neuron of the AI. In their blog post, Anthropic calls this “AI biology”.

What surprised me most is how natural these insights sound. Somehow, by training on predicting the next token, the AI actually managed to learn concepts, and its internal thinking feels so human. In the Netherlands we have a yearly tradition of writing silly rhyming poems during Sinterklaas (our version of Christmas), and my poem-writing strategy is exactly the same as what Claude does.

I’m curious to see if the same approach can shed more light on why AI hallucinates. Is it a “conscious” decision, does the AI get confused because it needs to choose between different internal mental concepts, or does it simply forget things when generating a long answer and fake its confidence instead? Although the research does touch on this topic briefly (Claude has an internal on/off concept that decides whether to answer a question or reply “I don’t know”), it doesn’t explain to me why it gives a wrong answer when I’m 100% sure it does know the correct one :’)

Looking forward to future research on this topic!