Do You Understand Me? Human and Machine Intelligence

By Lan Chu and Robert Jan Sokolewicz.

Terminology

In this article, we explore the notion of intelligence and what it means for computer programs to be intelligent. We will move between several terms and concepts often discussed in the context of human cognition, such as understanding, awareness, intelligence, and consciousness. Even though the meaning of these terms is somewhat elusive, we use them in their ordinary senses:

- Intelligence means the ability to learn, understand, reason, solve problems and make decisions.

- Understanding is a process where a person is able to comprehend or grasp the meaning or nature of something.

- Reason is the ability to apply logic or make logical connections between events based on new or existing knowledge.

- Awareness is a conscious recognition of something. It is about being cognisant of the elements in the environment and their relevance to the situation at hand.

The link between these concepts:

- Understanding is a component of intelligence. You would not call someone intelligent if they lacked actual understanding; understanding supports intelligent behavior.

- Awareness is a state that enables reasoning and understanding.

- Consciousness is the most complex and least understood of these concepts. To us, it is best thought of as the totality of experience: it encompasses awareness and understanding, and it is what allows humans to have subjective experiences.

Before the AI era

Before the rise of AI, we had basic computers whose mundane tasks consisted of running scientific calculations.

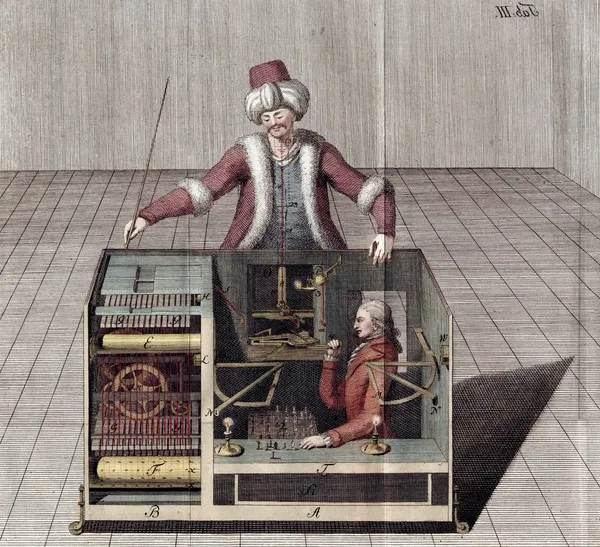

Before the computer, however, there were automata — machines that could operate on their own, designed to execute a series of predefined actions.

One well-known example is the Digesting Duck, built by Jacques de Vaucanson and unveiled in 1739. Made of copper, the duck could quack, eat and seemingly digest food.

Some of these automata were so advanced and well designed that they could mimic certain human behaviors. The most famous example is the Mechanical Turk, built and first exhibited in 1770, which appeared to be able to play chess. The machine toured Europe and the United States for over eight decades. It was later revealed, however, that the Mechanical Turk was not autonomous: it was secretly operated by a skilled chess player hidden inside the machine. So even before modern computing, we were already fascinated by, and thinking about, artificial intelligence in some sense.

The AI Effect and the Moving Target of Definition

Impressive. Data sets are now so rich, and processing is so quick. However, I plead with folks to stop calling this AI. It is not that. Yet. — A Redditor

There is a paradox in online communities like Reddit and Twitter: whenever something AI-related is posted, there are always comments stating that it is either “not AI” or “not true AI”. It seems that once an AI-related technology becomes the new normal, it is no longer considered AI. This is the so-called AI effect.

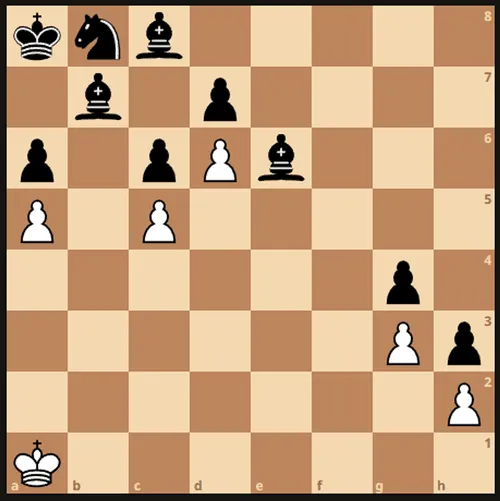

In 1997, when IBM’s Deep Blue supercomputer defeated Garry Kasparov, the reigning world champion, in a six-game chess match (a year after winning a single game against him in their first match), it was considered a giant feat in the development of AI. If a machine behaves as intelligently as a human being, then it is as intelligent as a human being, right?

Not quite. Many people were less enthusiastic, including John McCarthy, one of the “founding fathers” of artificial intelligence, who viewed it as brute-force computation rather than genuine intelligence.

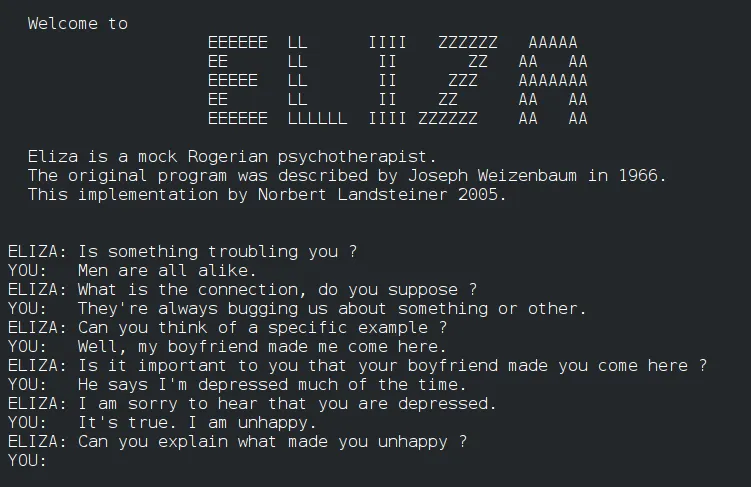

Even long before that, in 1966, one of the first chatbots, ELIZA, created by MIT computer scientist Joseph Weizenbaum, was able to “fool” some users into thinking that it showed true understanding and compassion, to the point that some were “hard to convince that ELIZA is not human”.

However, this is a misguided interpretation, one that Weizenbaum later tried to correct. The program simply followed a predefined script, combining pattern matching with the substitution of parts of the user’s input into its response: if a keyword is found, a rule that transforms the user’s comment is applied, and the resulting sentence is returned.
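To make that mechanism concrete, here is a minimal Python sketch of ELIZA-style keyword matching and substitution. The rules, the pronoun table and the function names (`respond`, `reflect`) are hypothetical and far simpler than Weizenbaum’s actual script; the point is only that the program echoes fragments of the user’s words back without any understanding of them.

```python
import re

# Hypothetical rules in the spirit of an ELIZA script: a keyword pattern
# paired with a response template that reuses part of the user's words.
RULES = [
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Lower-case a captured fragment and swap its pronouns."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(user_input: str) -> str:
    """Return the template of the first matching rule, or a stock reply."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT_REPLY


print(respond("I am worried about my exams"))
```

Calling `respond("I am worried about my exams")` returns “Why do you say you are worried about your exams?”, which can feel attentive even though the program only matched “i am” and swapped “my” for “your”.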

The definition of AI is a moving target that is constantly being redefined. As technology advances and AI systems become more capable, expectations for what deserves to be called AI keep rising. Tasks that were once considered AI, like playing chess, become the new normal once they are achieved, and the bar is raised higher.

When can something truly be called AI?

1. AI as a research field

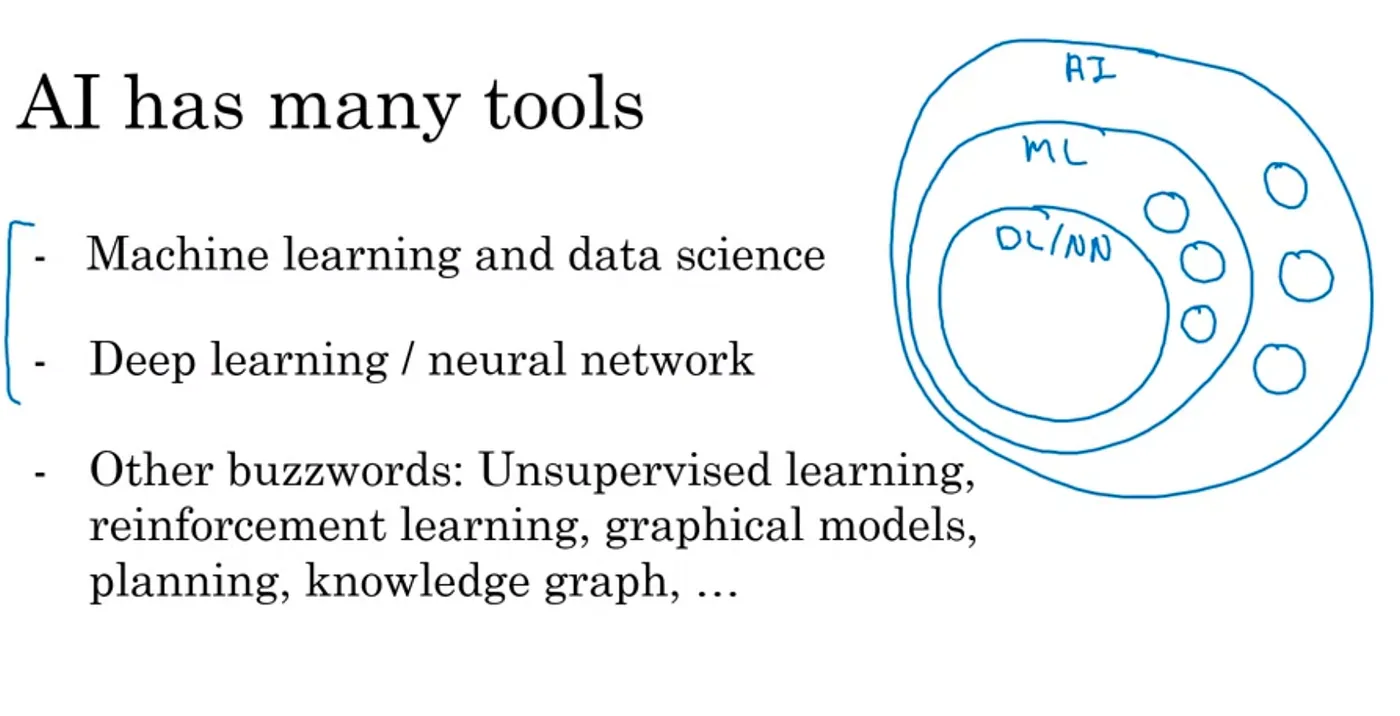

There does not seem to be a universal definition of the term “AI”. Nevertheless, many of us would agree that AI is a field of research that aims to make machines learn and behave intelligently. AI as a research field is rather well defined. For example, Andrew Ng defines it as follows:

AI is a set of tools for making computers behave intelligently — Andrew Ng

AI is the science of making machines do things that would require intelligence if done by man -Bertram Raphael, first AI PhD student.

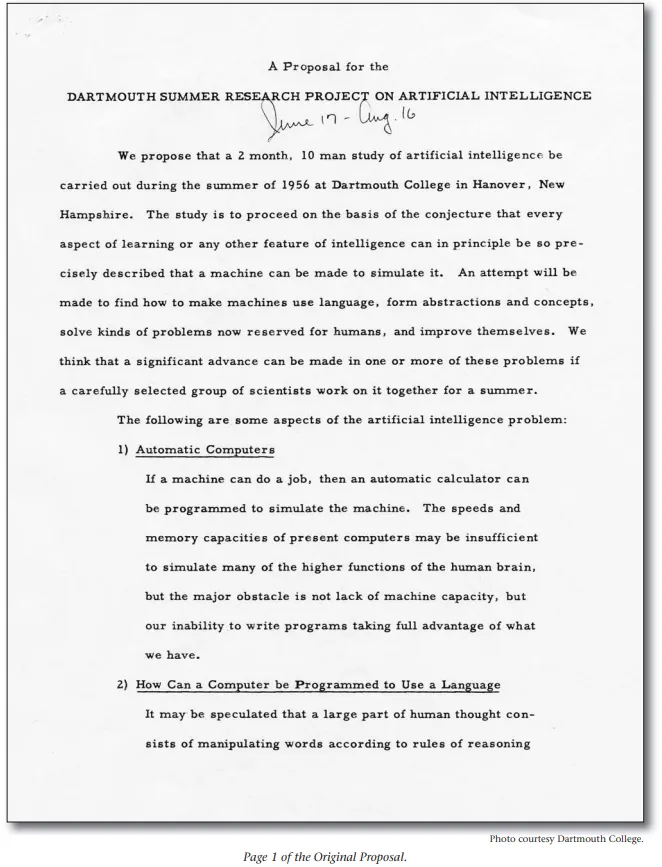

This definition is similar to the one in the Dartmouth proposal:

“making a machine behave in ways that would be called intelligent if a human were so behaving.”

These definitions, however, do not tell us how we might build a machine that behaves intelligently. Nor do they say what it means to be, or to behave, intelligently.

2. AI as the notion of “intelligence”

Strong AI vs Weak AI

The catch here is the term “intelligence”.

Because when people say “This is not AI”, they usually don’t refer to the field of AI, but to the notion of intelligence. We will see that the notion of intelligence becomes tricky when we focus less on what the AI does, but more on how it does it.

The field of AI has two directions, “Strong” and “Weak”.

Strong AI is associated with the Massachusetts Institute of Technology (MIT) and focuses on creating a general artificial intelligence that can understand, learn, and apply its intelligence broadly across different contexts, much like a human being. A strong AI would possess self-awareness and understanding, and would be able to think, solve problems, learn new tasks, and transfer knowledge between domains. This type of AI is mostly theoretical and does not exist yet.

Weak AI, on the other hand, is often associated with Carnegie Mellon University (CMU). These systems are “weak” because their intelligence and understanding are limited to their programmed area of expertise; they possess neither self-awareness nor genuine understanding. Examples of weak AI include chatbots, recommendation systems, and voice assistants. They appear intelligent within their narrow domain but cannot operate beyond it. All of today’s machine learning and deep learning algorithms fall into this category, and the GPT family of models is no exception: they are trained to predict the probability of the next word (more precisely, the next token) in a sequence. Proponents of weak AI measure a system’s success by its performance alone. To them, the goal of AI research is to solve difficult problems, regardless of how they are actually solved.
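As a toy illustration of “predicting the probability of the next word”, the sketch below counts which word follows which in a tiny made-up corpus and turns those counts into probabilities. This is a bigram model, not how GPT models work internally (they use transformer networks over tokens and are trained on vast amounts of text); the corpus and the function names are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real language models train on vastly more text.
CORPUS = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]


def train_bigram(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the follow-up counts for `word` into a probability distribution."""
    following = counts.get(word.lower())
    if not following:
        return {}  # word never seen with a successor
    total = sum(following.values())
    return {nxt: c / total for nxt, c in following.items()}


model = train_bigram(CORPUS)
print(next_word_probs(model, "the"))
```

Here “cat” gets a probability of 1/3 after “the”, because “the” is followed by “cat” in two of its six occurrences. The model does its narrow job while having no idea what a cat is.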

Functionalism/Instrumentalism versus Biological Plausibility

On one side of the spectrum is the functionalist/instrumentalist viewpoint, which cares more about the outcome than about how a problem is solved.

This bears similarities to the “weak AI” school of thought. On the other side of the spectrum is the view that, for a system to be called AI, it should solve problems intelligently, similar to how humans do. This is referred to as biological plausibility: the extent to which an algorithm mimics the principles of biological neural processing. This could mean using learning rules similar to those observed in the brain, such as Hebbian learning, or designing network architectures inspired by the organization of the cortex.
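Hebbian learning is often summarized as “cells that fire together wire together”: a connection is strengthened in proportion to the joint activity of the neurons it links, i.e. Δw_i = η · x_i · y, with learning rate η, input activity x_i and output activity y. The sketch below assumes a single linear neuron, y = Σ w_i x_i; the function name and the numbers are illustrative only.

```python
def hebbian_step(weights, inputs, learning_rate=0.1):
    """Apply one plain Hebbian update to a single linear neuron.

    The neuron's output is y = sum(w_i * x_i); each weight then changes by
    learning_rate * x_i * y, so only connections from active inputs grow.
    Returns the updated weights and the output that drove the update.
    """
    output = sum(w * x for w, x in zip(weights, inputs))
    new_weights = [w + learning_rate * x * output for w, x in zip(weights, inputs)]
    return new_weights, output


weights = [0.5, 0.5, 0.5]
pattern = [1.0, 1.0, 0.0]  # the first two inputs fire together, the third stays silent
for step in range(3):
    weights, y = hebbian_step(weights, pattern)
    print(step, round(y, 3), [round(w, 3) for w in weights])
```

Weights attached to the co-active inputs grow while the silent input’s weight stays put. Repeated plain Hebbian updates grow without bound, which is why practical variants such as Oja’s rule add a normalizing term.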

For example, it is common to include a scheme in which a chess program remembers the games it has played and adjusts its strategy based on the games it lost. It is far more challenging, however, to program a chess machine that understands strategic moves, controls the flow of the game, and forces the opponent onto the defensive. In other words, there is a clear distinction between a machine that appears intelligent by mimicking intelligent behavior and one that is genuinely intelligent because it has true cognitive capabilities.

If you play chess, you will know that the position in the example above is a clear draw. Although black has a material advantage (three bishops and a knight), it cannot capture any pieces, and white cannot capture any of the black pieces either, because of the bishop on e6. A strong chess computer, however, will evaluate this position as winning for black. To avoid a draw (which it rates as worse than a win), the computer playing black will sacrifice its bishop, giving white the chance to win the game. This is why following the rules a program was given is not enough: a computer system needs to understand what the rules mean, because that understanding is what would let it go beyond them.
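To see how a machine can misjudge such a position, consider a deliberately naive evaluation that only counts material, using the conventional textbook piece values (pawn 1, knight 3, bishop 3, rook 5, queen 9). Real engines combine deep search with much richer evaluations, and the pawns in the example below are a hypothetical stand-in, since only black’s extra three bishops and knight come from the text.

```python
# Conventional textbook piece values in pawns; the king is not counted.
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 0}


def material_score(white_pieces: str, black_pieces: str) -> int:
    """Material balance from white's point of view (negative = black 'ahead').

    Each side is given as a string of piece letters, e.g. "KPPB".
    """
    white = sum(PIECE_VALUES[piece] for piece in white_pieces.upper())
    black = sum(PIECE_VALUES[piece] for piece in black_pieces.upper())
    return white - black


# Hypothetical material: both sides share a king and four pawns, and black
# additionally owns the three bishops and the knight mentioned in the text.
print(material_score("KPPPP", "KPPPPBBBN"))  # -12
```

A score of -12 says black is far ahead, yet none of that extra material can ever be put to use: the number follows the counting rules without capturing what the position means.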

AI as a topic of debate

Computer scientists such as John McCarthy and Marvin Minsky were advocates of the strong AI direction. In 1959, a few years after the Dartmouth workshop, John McCarthy, one of the founding fathers of AI and one of its most influential figures, who devoted most of his life to the field, wrote “Programs with Common Sense”, often cited as the first paper to propose common sense reasoning as the key to AI systems. In it, McCarthy introduced a set of conditions a program must meet if it is to evolve toward human-level intelligence. The first is that, for a system to be capable of learning something, it must “first be capable of being told it”. This means the system should be capable of being instructed, and then of learning from experience and modifying its behavior, as humans do. McCarthy suggested that such a program would be able to deduce immediate logical consequences from any new information it receives, combined with what it already knows.

One will be able to assume that the AI will have available to it a fairly wide class of immediate logical consequences of anything it is told and its previous knowledge — Programs with Common Sense, John McCarthy.

In an interview with Jeffrey Mishlove, John McCarthy suggested that AI research had, and still has, difficult conceptual problems. As I understand it, the central one is the need for AI systems to understand, reason about, and act upon the realm of common sense knowledge that humans possess.

Humans possess a vast amount of “common sense” knowledge that we take for granted, e.g., basic physical facts such as that a dropped ball falls. The central issue of AI is therefore developing a structured way to express and encode human common sense and reasoning in a form that computer systems can work with. These conceptual challenges must be solved before we can create computer programs that are as intelligent as humans and capable of human-like common sense reasoning.
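As a rough picture of what “deriving immediate logical consequences of what it is told” can mean, here is a toy forward-chaining sketch: it keeps applying hand-written rules to known facts until nothing new can be derived. It is not McCarthy’s Advice Taker, which was formulated in formal logic; the properties (“dropped”, “fragile”, …) and the rules are hypothetical common-sense examples.

```python
# Hypothetical common-sense rules: if an object has every premise property,
# it also has the conclusion property ("anything dropped is unsupported", ...).
RULES = [
    (("dropped",), "unsupported"),
    (("unsupported",), "falls"),
    (("falls", "fragile"), "breaks"),
]


def forward_chain(facts, rules):
    """Repeatedly apply rules to (property, object) facts until nothing new appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        objects = {obj for _, obj in known}
        for premises, conclusion in rules:
            for obj in objects:
                if all((p, obj) in known for p in premises) and (conclusion, obj) not in known:
                    known.add((conclusion, obj))
                    changed = True
    return known


told = {("dropped", "cup"), ("fragile", "cup"), ("dropped", "ball")}
derived = forward_chain(told, RULES) - told
print(sorted(derived))
```

Told only that a cup and a ball were dropped and that the cup is fragile, the program derives that both fall and that the cup breaks. Every piece of common sense, however, had to be written down as a rule by hand, which is exactly the bottleneck described above.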

Of course machines can think; we can think and we are ‘meat machines’. — Marvin Minsky

However, the problem of giving computers genuine understanding is nowhere near solved. Common sense has proven difficult for machines, even though humans handle it effortlessly. A child can recognize speech because that ability is built into humans, yet we have a hard time understanding where that ability comes from and how to make computer programs do the same. Conceptual breakthroughs in understanding the human brain and how it works are still needed.

Ultimately, we want to see computer systems with genuine cleverness, rather than executing predetermined rules like a mindless machine. John McCarthy’s position was that we need systems that incorporate “real” understanding and, hence, intelligence.

I don’t see that human intelligence is something that humans can never understand. — John McCarthy

But not everyone was convinced, and the philosophical implications are profound. Joseph Weizenbaum, creator of ELIZA, one of the first chatbots, believed that the idea of humans as information processing systems is “far too simplistic a notion of intelligence” and that it drives the “perverse grand fantasy” that AI scientists could create a machine that learns “as a child does.” For Weizenbaum, we cannot humanize AI because AI is irreducibly non-human. We should never “substitute a computer system for a human function that involves interpersonal respect, understanding, and love”.

No human being could ever fully understand another human being. If we are often opaque to one another and even to ourselves, what hope is there for a computer to know us? — Weizenbaum

Hubert Dreyfus, a philosopher, said that some people “do not consider the possibility that the brain might process information in an entirely different way than a computer”. In his book “What Computers Can’t Do”, Dreyfus stressed that human cognition is fundamentally different: it relies on unconscious and subconscious processes, while machines merely follow explicit algorithms and make decisions based on the data they have been fed. Machines don’t “experience” the world or have subconscious influences. They lack the deep understanding that comes from lived experience and subconscious processing, and, on this view, it is philosophically impossible for them to acquire it.

The AI controversy: Is Linear Regression AI?

When you study machine learning, one of the first examples you will encounter is linear regression. Some argue that regression models cannot count as AI and that only deep learning can. This suggests that whether we call an algorithm or a system AI depends on how it solves a problem. Many people seem to have made a conscious decision to call something “AI” if and only if it involves deep learning/neural networks.

Second, we’re just feeding into this stupid hype cycle by calling everything AI. If you call your linear regression AI, you’re also supporting a race to the bottom in terms of what the phrase means. — A Medium-er

When the method of least squares is recast as a machine learning problem, solving linear regression becomes a matter of minimizing an error measure such as the mean squared error (MSE) or its square root (RMSE). The least squares method itself, however, dates back to the early 19th century, when Adrien-Marie Legendre (1805) and Carl Friedrich Gauss (1809) published it, and the term “regression” comes from Sir Francis Galton’s work in the late 19th century. Fitting a line by solving the so-called normal equations existed long before the dawn of artificial intelligence and does not involve any “learning”.
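For a straight line y = w0 + w1·x fitted to n points, the normal equations reduce to two linear equations, n·w0 + (Σx)·w1 = Σy and (Σx)·w0 + (Σx²)·w1 = Σxy, which can be solved directly. The plain-Python sketch below does exactly that, with no iteration and no “learning”; the function name and the data are illustrative.

```python
def fit_normal_equations(xs, ys):
    """Fit y = w0 + w1 * x by solving the 2x2 normal equations in closed form."""
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = n * sxx - sx * sx  # determinant of [[n, sx], [sx, sxx]]
    if det == 0:
        raise ValueError("need at least two distinct x values")
    # Cramer's rule on the two normal equations.
    w1 = (n * sxy - sx * sy) / det
    w0 = (sy * sxx - sx * sxy) / det
    return w0, w1


print(fit_normal_equations([0, 1, 2, 3], [1, 3, 5, 7]))  # (1.0, 2.0)
```

The points lie exactly on y = 1 + 2x, so the closed-form solution recovers that line in a single computation.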

(Un)fortunately, solving the normal equations becomes computationally expensive when the number of features is large, because it requires inverting (or factorizing) a matrix whose size grows with the number of features. An iterative optimization approach called gradient descent, which incrementally tweaks the model parameters to reduce the cost function, is often a much more efficient alternative in that setting.
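The sketch below fits a line by gradient descent: it starts from arbitrary parameters and repeatedly steps against the gradient of the mean squared error. The learning rate and the number of steps are assumptions tuned for the small example data; too large a learning rate makes the updates diverge.

```python
def fit_gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w0 + w1 * x by gradient descent on the mean squared error."""
    n = len(xs)
    w0, w1 = 0.0, 0.0  # arbitrary starting point
    for _ in range(steps):
        # Residuals of the current line on every data point.
        errors = [(w0 + w1 * x) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) w.r.t. w0 and w1.
        grad_w0 = 2.0 / n * sum(errors)
        grad_w1 = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        # Step a little way downhill.
        w0 -= learning_rate * grad_w0
        w1 -= learning_rate * grad_w1
    return w0, w1


w0, w1 = fit_gradient_descent([0, 1, 2, 3], [1, 3, 5, 7])
print(round(w0, 4), round(w1, 4))
```

On this data the parameters converge to roughly w0 = 1 and w1 = 2, the line y = 1 + 2x that the normal equations give exactly. For two parameters the closed form is cheaper; gradient descent (and its stochastic and mini-batch variants) pays off when there are many features or too much data to process at once.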

To decide whether linear regression can be considered AI, we first need to know which method is being used. Do we solve it with the normal equation? If so, there is obviously no “learning”; it is more a matter of letting a machine crunch the numbers. Do we use gradient descent to find the line of best fit? Then, I think, yes, it is machine learning. So quite often, when we fit a line to data, we are in fact using machine learning.

Yet the overarching question remains: can we consider this AI? The original definition in the Dartmouth summer research proposal, along with many contemporary definitions, suggests that linear regression is indeed AI: it can “learn” and adapt, and it produces results as if an intelligent human being had produced them. Based on McCarthy’s theory of intelligence, however, linear regression clearly relies neither on common sense reasoning nor on an understanding of the world around it.

Is drawing a line by minimizing errors intelligence? Does gradient descent resemble thinking and consciousness? Some say yes, some say no.

The choice is yours.

A fascinating theory of Consciousness

Consciousness originates from microtubules within neurons

While building a machine as truly intelligent as humans sounds philosophically impossible to some people, there might be some hope.

Consciousness is generally believed to emerge from the brain’s activity, but how it arises from the physical processes within the brain is still not well understood.

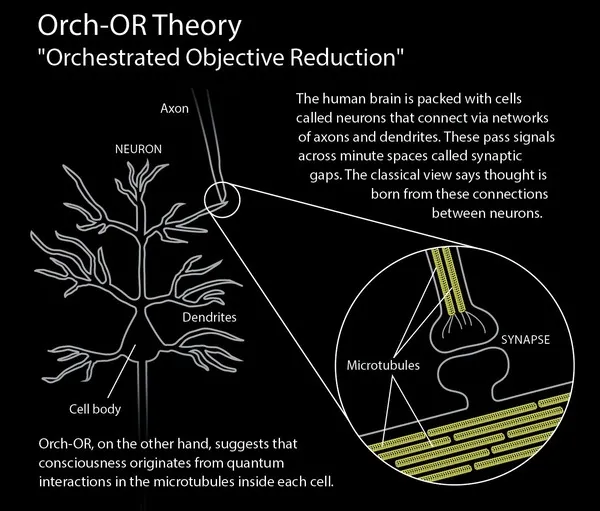

One common view in neuroscience is that communication between neurons at synapses is fundamental to the emergence of conscious experience. A synapse is the junction between two neurons, and its primary function is to transmit signals from one neuron to the next. Most neuroscientists focus on synaptic network activity when studying consciousness and regard the brain’s computational power as a product of synaptic connections. These views tend to treat brain activity as a computational process.

However, Stuart Hameroff, an anesthesiologist and professor, and Roger Penrose, a mathematician and physicist who later received the 2020 Nobel Prize in Physics, proposed another fascinating theory of consciousness called Orch OR (orchestrated objective reduction).

According to Roger Penrose, the question of consciousness revolves around three concepts: intelligence, understanding, and awareness. You would not call something intelligent if it does not understand, and to understand, it needs to be aware. If understanding can be shown to be something beyond computation, then intelligence is not a matter of computation.

The Orch-OR theory suggests that consciousness originates in microtubules, structural components of the neuron’s cytoskeleton, rather than at synapses.

According to this theory, microtubules could be the site of quantum effects that give rise to consciousness, a departure from the conventional view of neural processing, in which consciousness arises from synaptic activity.

Whatever consciousness is, it is not a computation — Roger Penrose

It actually started long ago, when Roger Penrose published “The Emperor’s New Mind” (1989), in which he argued that something like a quantum computer was needed in the brain. Stuart Hameroff was blown away by the book and suggested that Penrose look at microtubules. They later teamed up and developed the Orch-OR theory.

Throughout his career, Stuart Hameroff has studied how anesthesia works, and he has proposed that anesthetics erase consciousness by dampening quantum vibrations in microtubules inside brain neurons. Proponents see this as an exciting argument for a link between consciousness and microtubules. Another argument offered in support is that the breakdown of microtubules is associated with Alzheimer’s disease.

What are the properties of microtubules that can be linked to consciousness?

Well, I won’t pretend that I understand neuroscience and quantum physics, but let’s at least give it a try 😊

The idea is that microtubules, with their symmetrical structure, may play a crucial role in consciousness because they may preserve quantum states much better than other parts of the cell. What matters in the theory is what eventually happens to those states: the transition from a quantum superposition to a single outcome.

Superpositions in quantum mechanics

In quantum mechanics, superposition is a fundamental principle that allows particles, such as electrons, to be in multiple states or locations simultaneously. This principle is radically different from our everyday experiences and classical physics, where an object can only be in one state or place at a time.

Imagine you have a coin, and you flip it into the air. In the everyday world, the coin can only be either heads or tails when it lands. But if the coin were governed by quantum mechanics, it could be in a state where it’s both heads and tails at the same time while it is in the air.

This state of being in multiple states at once remains as long as we do not measure the system. In the case of the coin, this would be like not looking at the coin to see whether it is heads or tails. In quantum mechanics, when we finally “look” (i.e., make a measurement), the superposition collapses into one of the possible states.
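A classical simulation can mimic the bookkeeping of measuring such a quantum coin. In the sketch below, the state is a pair of amplitudes for heads and tails; measuring picks an outcome with probability equal to the squared magnitude of its amplitude (the Born rule) and then collapses the state onto that outcome. The seeded pseudo-random generator merely stands in for quantum randomness, nothing here models interference or entanglement, and the function name is hypothetical.

```python
import math
import random


def measure(amplitudes, rng):
    """Simulate measuring a two-state system with amplitudes (heads, tails).

    Born rule: each outcome occurs with probability |amplitude|**2. The
    returned state has collapsed onto the observed outcome.
    """
    a_heads, a_tails = amplitudes
    p_heads = abs(a_heads) ** 2
    p_tails = abs(a_tails) ** 2
    if not math.isclose(p_heads + p_tails, 1.0):
        raise ValueError("amplitudes must be normalized")
    if rng.random() < p_heads:
        return "heads", (1.0, 0.0)
    return "tails", (0.0, 1.0)


rng = random.Random(0)  # seeded pseudo-randomness standing in for quantum chance
superposed = (1 / math.sqrt(2), 1 / math.sqrt(2))  # "both heads and tails"

outcome, collapsed = measure(superposed, rng)
repeat, _ = measure(collapsed, rng)
print(outcome, repeat)  # the second measurement repeats the first

heads = sum(measure(superposed, rng)[0] == "heads" for _ in range(10_000))
print(heads / 10_000)  # close to 0.5
```

With equal amplitudes of 1/√2, roughly half of many fresh measurements come up heads, while measuring an already collapsed state always returns the same answer.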

One thought experiment that illustrates the concept of superposition is Schrödinger’s cat, devised by the Austrian physicist Erwin Schrödinger in 1935 to show how strange the consequences become when superposition is applied to everyday objects.

Quantum states are the key to the Schrödinger’s cat thought experiment. The cat’s fate is entangled with the quantum state of a radioactive atom: a decay would trigger a mechanism that kills the cat, and until the box is opened the atom is in a superposition of having decayed and not having decayed, so the cat is described as being in a superposition of alive and dead. Because particles can exist in multiple states at once, the act of measuring or observing a quantum system is said to ‘collapse’ the superposition into one of the possible states.

Penrose suggests that when these quantum states reach a certain level of instability, they collapse on their own, and that the outcomes of these collapses are non-computable, meaning that no algorithm can compute or predict them, no matter how much (finite) time or memory it is given. You therefore cannot know in advance what the outcome will be. Penrose suggests that the process that leads to quantum state collapse may be the same process that leads to consciousness. And this is where the theory makes a significant leap: the non-computable outcomes of these collapses are proposed to give rise to moments of conscious awareness.
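In the Orch OR literature, the “certain level of instability” is made quantitative by Penrose’s objective reduction criterion. If E_G denotes the gravitational self-energy of the difference between the mass distributions of the superposed states, a superposition is expected to collapse spontaneously after a time of roughly

$$
\tau \approx \frac{\hbar}{E_G}
$$

where ħ is the reduced Planck constant. The larger the mass displacement between the superposed states, the larger E_G and the sooner the collapse. This is an order-of-magnitude estimate rather than a precise prediction.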

Silicon-based computers can never achieve true consciousness

With this theory, Penrose argues that consciousness involves non-computable processes in the human brain. While Penrose and Hameroff have proposed Orch OR as a potential explanation of consciousness, Penrose has acknowledged that the exact mechanism by which quantum state collapse could give rise to conscious experience is not yet well understood.

However, if consciousness does involve non-computable processes, it cannot be fully replicated by today’s computers, which operate entirely on computable functions. In that case, silicon-based computers would not be capable of achieving true consciousness, and we would have to find a new type of system or technology, different from current computers, to create a machine with consciousness.

This means we would need a system with biological properties, or one with non-computable properties, but I have no idea what that could be. The Orch-OR theory remains speculative and a topic of debate and further research.

Conclusion

As we have journeyed through the history of AI and witnessed its evolution, one fact stands out: the definition of AI is as fluid as the technology itself. The debates surrounding AI’s nature and capabilities are far from settled.

We, however, are convinced that intelligence and consciousness cannot be purely computational. For a machine to be conscious, it needs more than a mimicry of the human brain through simulated neurons. Let’s wait for folks who are smart enough to understand human intelligence and to design systems that take humans’ “real intelligence” into account.

References

John McCarthy: Thinking Allowed, DVD, Video Interview

Consciousness in the universe: A review of the ‘Orch OR’ theory — ScienceDirect

Sir Roger Penrose & Dr. Stuart Hameroff: CONSCIOUSNESS AND THE PHYSICS OF THE BRAIN — YouTube