Getting structured information out of images


Using OpenAI's latest and most advanced model, GPT-4o

OpenAI recently released GPT-4o, which it claims is its best AI model yet! The new model offers real-time multimodal capabilities across text, vision, and audio with the same level of intelligence as GPT-4 Turbo, but it is much more efficient: it has lower latency, generates text 2x faster and, very importantly, costs half as much as GPT-4 Turbo.

Motivation

If you need to analyse images to collect structured information, you are in the right place. In this article, we will quickly learn how to extract structured information from an image using OpenAI's latest and most advanced model, GPT-4o.

Workflows

First import the relevant libraries:

```python
import base64
import json
import os

import openai
import pandas as pd
from openai import OpenAI
```

For this article, I will use the following image of a menu for demonstration purposes. You can apply the same principle to your own images to ask any kind of question:

Step 1: Load and Encode Images

Images are made available to the model in two main ways: by passing a link to the image or by passing the base64-encoded image directly in the request. Base64 is an encoding scheme that represents binary data, such as an image file, as a string of plain ASCII text. You can pass images in user, system, and assistant messages.
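A minimal sketch of what the two options look like as message content parts, using the same `image_url` shape as the request in Step 3. The helper names and the example URL are hypothetical placeholders:

```python
import base64


def image_part_from_url(url: str) -> dict:
    """Build an image content part that points the model at a hosted image."""
    return {"type": "image_url", "image_url": {"url": url}}


def image_part_from_bytes(data: bytes, mime_type: str = "image/png") -> dict:
    """Build an image content part that embeds raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(data).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}}


# Option 1: pass a link to the image (placeholder URL)
by_link = image_part_from_url("https://example.com/menu.png")

# Option 2: embed the image bytes directly in the request
by_value = image_part_from_bytes(b"abc")
print(by_value["image_url"]["url"])  # data:image/png;base64,YWJj
```

Either dictionary can go in the `content` list of a user message next to the text part; the data URL form is what the rest of this article uses, because the image lives on disk.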

Since my image is stored locally, let's encode it as a base64 data URL. The following function reads the image file, determines its MIME type, and encodes it into a base64 data URL that is suitable for sending to the API:

```python
import base64
from mimetypes import guess_type


# Function to encode a local image into a data URL
def local_image_to_data_url(image_path):
    # Guess the MIME type of the image based on the file extension
    mime_type, _ = guess_type(image_path)
    if mime_type is None:
        mime_type = "application/octet-stream"  # Default MIME type if none is found

    # Read and encode the image file
    with open(image_path, "rb") as image_file:
        base64_encoded_data = base64.b64encode(image_file.read()).decode("utf-8")

    # Construct the data URL
    return f"data:{mime_type};base64,{base64_encoded_data}"
```
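Before sending anything to the API, you may want to confirm that a data URL decodes back to the original bytes. The sketch below assumes the `data:<mime>;base64,<payload>` layout produced above; `data_url_to_bytes` is a hypothetical helper, not part of any library:

```python
import base64


def data_url_to_bytes(data_url: str) -> tuple[str, bytes]:
    """Split a base64 data URL into its MIME type and the decoded bytes."""
    header, sep, payload = data_url.partition(",")
    if not sep or not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("not a base64 data URL")
    mime_type = header[len("data:"):-len(";base64")]
    # validate=True raises binascii.Error (a ValueError subclass) on non-base64 characters
    return mime_type, base64.b64decode(payload, validate=True)


# Round trip: encode some bytes the same way local_image_to_data_url does, then decode them
original = b"\x89PNG not a real image"
url = "data:image/png;base64," + base64.b64encode(original).decode("utf-8")
mime, decoded = data_url_to_bytes(url)
print(mime, decoded == original)  # image/png True
```

Applied to the function above, `data_url_to_bytes(local_image_to_data_url(image_path))[1]` should equal the raw bytes of the file at `image_path`.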

Step 2: Set Up the API Client and model

To build the pipeline, let’s first set up the OpenAI API client with our API key:

```python
openai.api_key = "your api key"
client = OpenAI(api_key=openai.api_key)
model = "gpt-4o"
```

This step initializes the OpenAI client, which we use to send requests to GPT-4o. Now we are ready to run the pipeline.
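Hard-coding the key works for a quick demo, but it is easy to leak when a notebook is shared. A common alternative, sketched here with a hypothetical `require_api_key` helper, is to keep the key in the `OPENAI_API_KEY` environment variable and read it at runtime:

```python
import os


def require_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable, failing loudly if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running the pipeline.")
    return key
```

You would then write `client = OpenAI(api_key=require_api_key())`. The OpenAI Python client also reads `OPENAI_API_KEY` on its own when `api_key` is omitted, so plain `OpenAI()` works too; the helper only adds a clearer error message.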

Step 3: Run the GPT-4o pipeline to process the image and get responses

In this code, we iterate through the images in the specified directory, encode each image, and send a request to the GPT-4o model. The model is instructed to analyze the image and extract structured information, which should be returned in JSON format.
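The loop that follows uses `os.listdir(path)`, which returns every entry in the folder in arbitrary order, including files that are not images (for example `.DS_Store`). A minimal sketch of a filter you could swap in, assuming file extensions are a good enough signal of image type; `list_image_files` is a hypothetical name:

```python
import mimetypes
import os


def list_image_files(directory: str) -> list[str]:
    """Return sorted paths of files in `directory` whose extension maps to an image MIME type."""
    paths = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        mime_type, _ = mimetypes.guess_type(path)
        # Skip subdirectories and anything that does not look like an image
        if os.path.isfile(path) and mime_type is not None and mime_type.startswith("image/"):
            paths.append(path)
    return paths
```

Because this helper returns full paths, use `os.path.basename(image_path)` if you still want to store just the file name in each response record.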

```python
# Define the base directory for the images, encode them into base64,
# and use the model to extract structured information

# Instructions for the model: always answer from the attached image, in JSON
system_prompt = """
You are `gpt-4o`, the latest OpenAI model that can interpret images and can describe images provided by the user
in detail. The user has attached an image to this message for
you to answer a question, there is definitely an image attached,
you will never reply saying that you cannot see the image
because the image is absolutely and always attached to this
message. Answer the question asked by the user based on the
image provided. Do not give any further explanation. Do not
reply saying you can't answer the question. The answer has to be
in a JSON format. If the image provided does not contain the
necessary data to answer the question, return 'null' for that
key in the JSON to ensure consistent JSON structure.
"""

# The question about the image, including the JSON fields we expect back
user_prompt = """
You are tasked with accurately interpreting detailed charts and
text from the images provided. You will focus on extracting the price for all the DRINKS from the menu.
Guidelines:
- Include all the drinks in the menu
- The output must be in JSON format, with the following structure and fields strictly adhered to:
  - drink: the name of the drink
  - price: the price of the drink
  - currency: the currency
"""

base_dir = "data/"
responses = []
path = os.path.join(base_dir, "figures")

if os.path.isdir(path):
    # Use the image and ask the question.
    # The [:1] slice processes only the first file; drop it to process every image.
    for image_file in os.listdir(path)[:1]:
        image_path = os.path.join(path, image_file)
        try:
            data_url = local_image_to_data_url(image_path)
            response = client.chat.completions.create(
                model=model,
                messages=[
                    {"role": "system", "content": system_prompt},
                    {
                        "role": "user",
                        "content": [
                            {"type": "text", "text": user_prompt},
                            {"type": "image_url", "image_url": {"url": data_url}},
                        ],
                    },
                ],
                max_tokens=3000,
            )
            content = response.choices[0].message.content
            responses.append({"image": image_file, "response": content})
        except Exception as e:
            print(f"error processing image {image_path}: {e}")

responses
```

Each entry in the `responses` list holds the model's reply as JSON, as requested in my prompt. The reply looks as follows, and it appears correct when checked against the reference photo. Awesome!

```json
[
  {
    "drink": "Purified Water",
    "price": 3.99,
    "currency": "$"
  },
  {
    "drink": "Sparkling Water",
    "price": 3.99,
    "currency": "$"
  },
  {
    "drink": "Soda In A Bottle",
    "price": 4.50,
    "currency": "$"
  },
  {
    "drink": "Orange Juice",
    "price": 6.00,
    "currency": "$"
  },
  {
    "drink": "Fresh Lemonade",
    "price": 7.50,
    "currency": "$"
  }
]
```
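The prompt asks for a fixed structure, but nothing forces the model to follow it, so a quick validation step can catch replies that drift. The sketch below is one way to do that; `validate_menu_items` is a hypothetical helper, and `EXPECTED_KEYS` uses the field names from the output above:

```python
import json

# Field names taken from the sample reply above
EXPECTED_KEYS = {"drink", "price", "currency"}


def validate_menu_items(raw_json: str) -> list[dict]:
    """Parse the model's reply and check that every record has the expected fields."""
    items = json.loads(raw_json)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array of menu items")
    for i, item in enumerate(items):
        if not isinstance(item, dict):
            raise ValueError(f"item {i} is not a JSON object")
        missing = EXPECTED_KEYS - set(item)
        if missing:
            raise ValueError(f"item {i} is missing {sorted(missing)}")
        # The system prompt allows null when the image lacks a value, so accept None
        price = item["price"]
        if price is not None and not isinstance(price, (int, float)):
            raise ValueError(f"item {i} has a non-numeric price: {price!r}")
    return items


sample = '[{"drink": "Orange Juice", "price": 6.00, "currency": "$"}]'
print(validate_menu_items(sample))  # [{'drink': 'Orange Juice', 'price': 6.0, 'currency': '$'}]
```

Raising early keeps a malformed reply from silently turning into a DataFrame with missing or misnamed columns in the next step.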

Step 4: Turn the JSON output into a DataFrame

You can also turn the JSON output into a more structured format by parsing it into a pandas DataFrame with the following code:

```python
def format_output_qa(output, debug=False):
    """Convert one JSON reply from the model into a DataFrame."""
    try:
        # Clean up the JSON output: drop newlines and any Markdown code fence
        output_text = output.replace("\n", "")
        output_text = output_text.replace("```json", "")
        output_text = output_text.replace("```", "")
        if debug:
            # Return the cleaned text instead of parsing it
            return output_text
        output_dict = json.loads(output_text)
        # Create a DataFrame with one row per record
        df = pd.DataFrame(output_dict)
    except Exception as e:
        print(f"Error processing output: {e}")
        df = pd.DataFrame({"error": str(e)}, index=[0])
    return df


# Now process each response in the list
frames = []
for response_dict in responses:
    df = format_output_qa(response_dict["response"])
    df["image"] = response_dict["image"]  # record which image the rows came from
    frames.append(df)

# Concatenate once at the end rather than growing a DataFrame inside the loop
df_output = pd.concat(frames, ignore_index=True) if frames else pd.DataFrame()
df_output
```
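The string replacements in `format_output_qa` handle the reply shown earlier, but they would fail if the model added a sentence before or after the code fence. A slightly more defensive alternative, sketched below, extracts the body of the first fenced block with a regular expression and parses only that; `extract_json` is a hypothetical name:

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built from single backticks so this snippet nests cleanly
# Capture the body of the first fenced block, with an optional "json" tag
FENCE_RE = re.compile(FENCE + r"(?:json)?\s*(.*?)\s*" + FENCE, re.DOTALL | re.IGNORECASE)


def extract_json(text: str):
    """Parse JSON from a model reply, unwrapping a Markdown code fence if one is present."""
    match = FENCE_RE.search(text)
    payload = match.group(1) if match else text
    return json.loads(payload.strip())


reply = "Here are the drinks:\n" + FENCE + 'json\n[{"drink": "Orange Juice", "price": 6.00, "currency": "$"}]\n' + FENCE
print(extract_json(reply))  # [{'drink': 'Orange Juice', 'price': 6.0, 'currency': '$'}]
```

Inside `format_output_qa`, `output_dict = extract_json(output)` could replace the three `replace` calls and the `json.loads` line.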

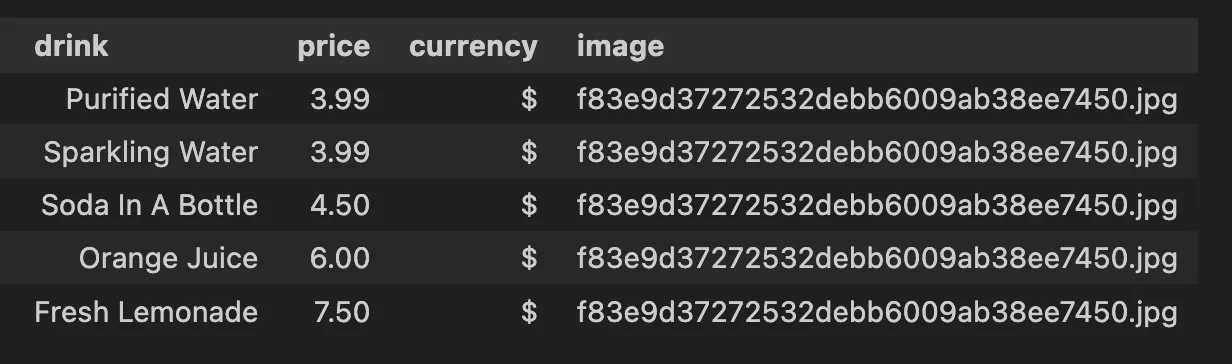

And the result will be as following:

Conclusion

This is a simple yet powerful pipeline that leverages GPT-4o's vision capabilities to extract data from images, turning unstructured visual content into structured data for further analysis. Whether you are collecting or analyzing visual content, these steps provide a solid foundation for integrating image processing into your AI workflows.

Thanks for reading! I love writing about data science concepts and playing with different AI models and data science tools. Feel free to connect with me here on Medium or on LinkedIn.