LLM Output — Evaluating, debugging and interpreting.

Posted on

Evaluating the output of GenAI models is as crucial as it is challenging.

Back in the day before the GenAI time, you simply split your data into training/test/validation sets — train your model on the training set and evaluate performance on the validation and test set. In supervised learning, we use R-squared, Precision, recall, or F-sore to evaluate performance. How is a LLM evaluated? What is the ground truth when it comes to generating new texts?

In this article, we will be looking at some methods to evaluate, debug, and interpret the output of the LLMs. The model I will be using in this article is GPT-3.5-Turbo, you can apply the techniques in this article to other LLMs.

Obviously, the most reliable way to evaluate an LLM system is to create an validation dataset and compare the model-generated output with the validation set. The downside of this approach is that it is money and time-consuming.

So, when we don’t have such a validation set, what should we do? This article will concentrate on assessing accuracy in two iconic NLP tasks: sentiment analysis and text summarization.

Table of Content

1.Evaluation of sentiment analysis task

>» LLM as a judge

>» Debug the model output

>» Benchmark the consistency of model’s output

2. Evaluation of summary task

>» Evaluate using Term-based matching: ROUGE Score

>» Evaluate using semantic similarity

>» LLM as a judge

Evaluation of sentiment analysis task

Prompt pipeline to get sentiment score

Sentiment analysis is an iconic task in NLP that has been widely used across various sectors related to customer reviews about products and services. In this article, we will do something different – we will analyze the sentiment on how concerned the United States is over global peace and security based on their speeches at the UN General Debate.

A common goal of sentiment analysis is to predict the sentiment label e.g “Negative”, “Positive”, “Neutral”. However, such classifications may not be useful in cases when the language is essentially “neutral” in tone or in the use of the language (i.e , in reports or speeches), but there are subtle sentiments in it. For that reason, in such cases, I will propose to use a continuous score to reflect the sentiment nuances more accurately.

The analysis in this article employs a dataset composed of the corpus of texts of UN General Debate, that contains all the statements made by each country’s presentative at UN General Debate from 1946 to 2022.

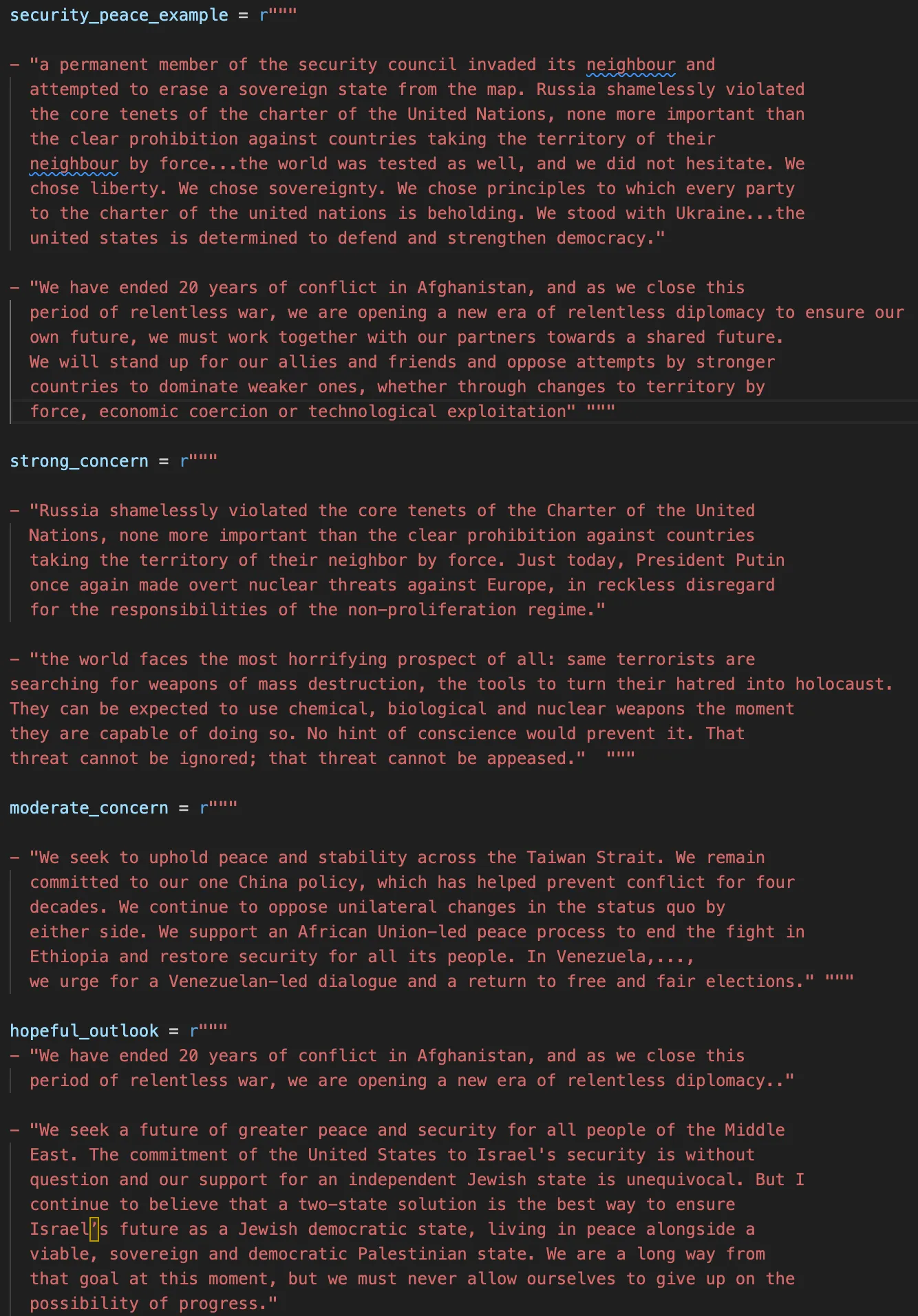

I will employ few-shot learning, a common technique allowing the model to learn from a limited set of examples. All examples used for few-shot learning are contained within a Python file called example.py, as in the following snapshot:

The following code implements this approach:

def get_completion_from_messages( document,

model=“GPT-3.5-Turbo”,

temperature=0,

seed: int = None,):

max_length = 5564 # a simple way to address token limit

if len(document) > max_length:

document = document[:max_length]

prompt = \[

{

"role": "system",

"content": """You are an analyst skilled at analyzing speeches from the

countries at the United Nations. Your task is to analyze speeches

from United States (US) to understand their concern regarding global

security, war and peace. """,

},

{

"role": "user",

"content": "Can you find examples of text where the US talks about global security, war and peace.",

},

{"role": "assistant", "content": example.security\_peace\_example},

{

"role": "user",

"content": "Can you show me examples of text where the US shows strong concern over global security, war and peace?",

},

{"role": "assistant", "content": example.strong\_concern},

{

"role": "user",

"content": "Can you show me examples of text where the US shows moderate concern over global security, war and peace?",

},

{"role": "assistant", "content": example.moderate\_concern},

{

"role": "user",

"content": "Can you find examples where the US shows positive and hopeful outlook over global security, war and peace?",

},

{"role": "assistant", "content": example.hopeful\_outlook},

{

"role": "user",

"content": f"""

I will provide a speech by the US as following:

US speech: {document}

You must assign a score to the speech. The score must follow the following rules:

- Score range:

- The score must be between -1 and +1.

- Assign a score of 0 if the US is neutral about global

security, peace and war, meaning there is no concern.

- Assign a negative score (down to -1) if there is a strong

concern about global security, peace and war.

- Assign a positive score (up to +1) if there is a positive and

hopeful outlook about global security, peace and war.

The answer must be provided in the form of a json format as

following, this is non-negotiable:

{{"score" : ..., "motivation" : ...,}}

For each entry, provide a score for the inflation concern adhering

strictly to the given rules. Accompany the score with a concise and

precise motivation, limited to 20 words.

Include only these elements and NOTHING ELSE!

""",

},

\]

retries = 2

for i in range(retries):

try:

response = openai.ChatCompletion.create(

model=model,

messages=prompt,

temperature=temperature,

seed=seed,

)

with open("output\_prompt.txt", "w") as f:

f.write(str(prompt))

return response.choices\[0\].message\["content"\]

except Exception as e:

if i < retries - 1:

time.sleep(2)

else:

return f"Error occurred: {e}"

With the above prompt, the model returns me the following output (after some post-processing):

A quick look shows that the US was strongly concerned about the global peace and security in 2001- 2003 ( could be due to the September 11 attacks?) and in 2008 as well as in 2022 — probably due to the Russian-Ukraine war. Beautiful!

Evaluation

Now question is, how do we know if this output is correct? Let’s evaluate!

For sentiment analysis evaluation, I will look into three techniques that first use a LLM to evaluate the LLM’s output, then debug model output for edge cases, and finally benchmark the consistency of model’s output across multiple runs.

>» LLM as a judge

Due to LLM’s ability to understand language, a common evaluation technique these days is using LLMs to decode LLMs itself.

The idea is quite simple: you take the LLM-generated output whose quality you want to evaluate, you pass it into an evaluation prompt template that you provide to the same LLM, and that LLM will re-evaluate the output it generated before.

Now, my idea is to run a review pipeline to ask the model, given the original text input, to re-evaluate its initial scoring to ensure the scores strictly follow the given rules I specified. If the initial score/assessment is not justified, then provide a corrected score and motivation for that correction. The following code is for the review pipeline:

def self_check( text, score, motivation, model= model, temperature=0, seed: int = None):

scoring_rules = f"""

Scoring rules:

- The score must be between -1 and +1.

- Assign a score of 0 if the US is neutral about global

security, peace and war, meaning there is no concern.

- Assign a negative score (down to -1) if there is a strong

concern about global security, peace and war.

- Assign a positive score (up to +1) if there is a positive and

hopeful outlook about global security, peace and war.

"""

\# Construct the self-check prompt with the speech, score, motivation, and scoring rules

self\_check\_prompt = {

"role": "user",

"content": f"""You have previously provided a score '{score}' and motivation '{motivation}' for that score regarding United State's concerns over global peace and security.

Your scoring is guided by using the following text: '{text}'.

Re-evaluate your initial analysis to ensure it strictly follows the given {scoring\_rules}.

If the initial assessment is incorrect, provide a corrected score and motivation for that.

If the initial score already follows the rules, the corrected score is the same as the initial score.

The answer must be provided in the form of a json format as following and that is non-negotiable:

{{"new\_score" : ..., "new\_motivation" : ...,}}

For each entry, provide a new score along with a concise and precise motivation, limited to 20 words. Include only these elements and NOTHING ELSE!""",

}

try:

response = openai.ChatCompletion.create(

model="GPT-3.5-Turbo", messages=\[self\_check\_prompt\], temperature=temperature

)

return response.choices\[0\].message\["content"\]

except Exception as e:

return f"Error occurred: {e}"

and this is what it returns me:

This is quite cool, isn’t it? Most of the time, the initial score and the reviewed score are close or at least share the same positive or negative sign. But there was one year, in 2017, when the model initially gave a positive score but later returned a negative score in the review pipeline. Ok, this seems fishy. Let us do another check for this example to understand the reasoning behind this.

>» Debugging the model output

Yes, I know, this sounds a bit silly and not scientific but I am actually a fan of playing with the model to understand its behaviour. Framework like HELM is great but to me personally, the best way actually get a feeling of how a model performances is to actually just play around with it.

To do that, we will simulate a conversation to debug the model outputs. Below is an example of me trying to talk to the model to understand why it makes a certain decision. The process involves me acting as the user, receiving responses from the model/assistant, and then posing questions based on the model’s replies.

{

“role”: “assistant”,

“content”: “{‘score’: -0.4}”, #output of model

},

{

“role”: “user”,

“content”: “why do you give a score of -0.4?”, # me

},

{

“role”: “assistant”,

“content”: """

I give a score of -0.4 because the speech expresses a moderate

concern about global security and peace. While the speech

acknowledges the growing dangers that threaten everything we cherish

and value, it also highlights the enormous potential waiting to be

unleashed. The speech emphasizes the need for strong and independent

nations to cooperate to promote security, prosperity, and peace.

However, the speech does not convey an intense, strong concern, and

it reflects a measured, moderate concern with a focus on economic

growth and military strength..""", #output of model

},

{

“role”: “user”,

“content”: """

But before that you give the same speech a score of 0.2,

why is that?""", # me

},

{

“role”: “assistant”,

“content”: """

I apologize for the confusion. I made a mistake in my previous

response. The speech does not express a positive and hopeful outlook

about global security and peace. Therefore, I cannot give a

score of 0.2. The speech focuses on domestic issues such as the economy,

job growth, and military strength, and while it acknowledges the

threats to global security, it does not express a positive outlook.

“”",#output of model

},

{

“role”: “user”,

“content”: """

Why did you make the mistake? Is the prompt not clear?""", # me

},

{

“role”: “assistant”,

“content”: """

I apologize for the mistake. The prompt is clear, and I should have

been more careful in my analysis. I will ensure to be attentive

and accurate in my future responses. Thank you for bringing this to

my attention.""", #output of model

},

Ok, cool. While the model acknowledges and apologizes for the error, it does not clarify the reason why it makes that mistake. But if you persist and probe further, the conversation may shed some light on its behaviors. I think this approach can be quite useful to pinpoint scenarios in the model that tend to give the wrong answer, allowing improving the prompt to address the errors in those scenarios.

That said, it is important to keep in mind that these language models can hallucinate and be confidently wrong. OpenAI shares a useful trick using logprobs to evaluate how confident the model is in its retrieval and reduce the hallucination.

>» Benchmarking the consistency of model’s output

The output from LLMs like ChatGPT is not always reproducible. Chat Completions are non-deterministic by default, even at temperature = 0, which means model outputs may differ from request to request.

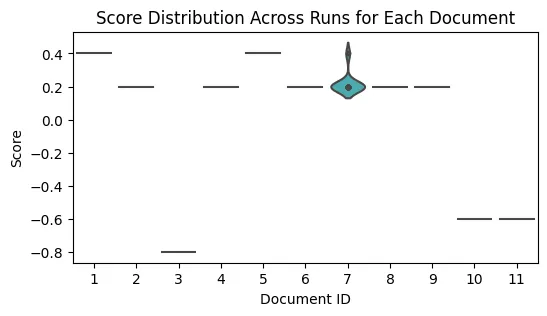

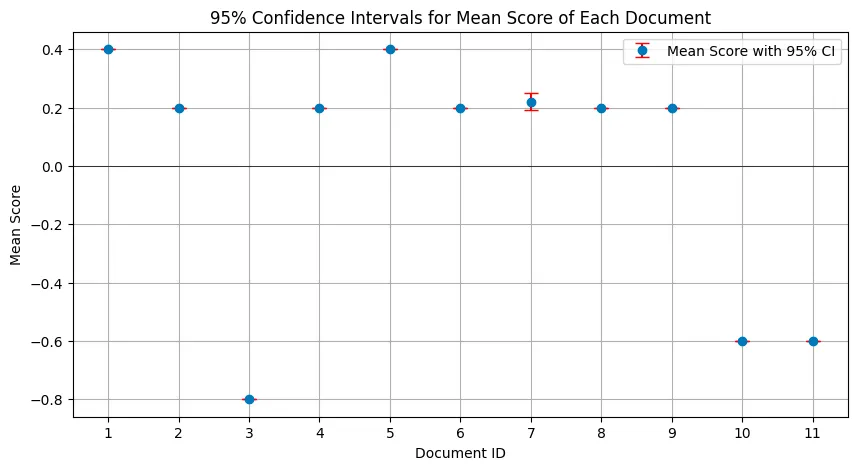

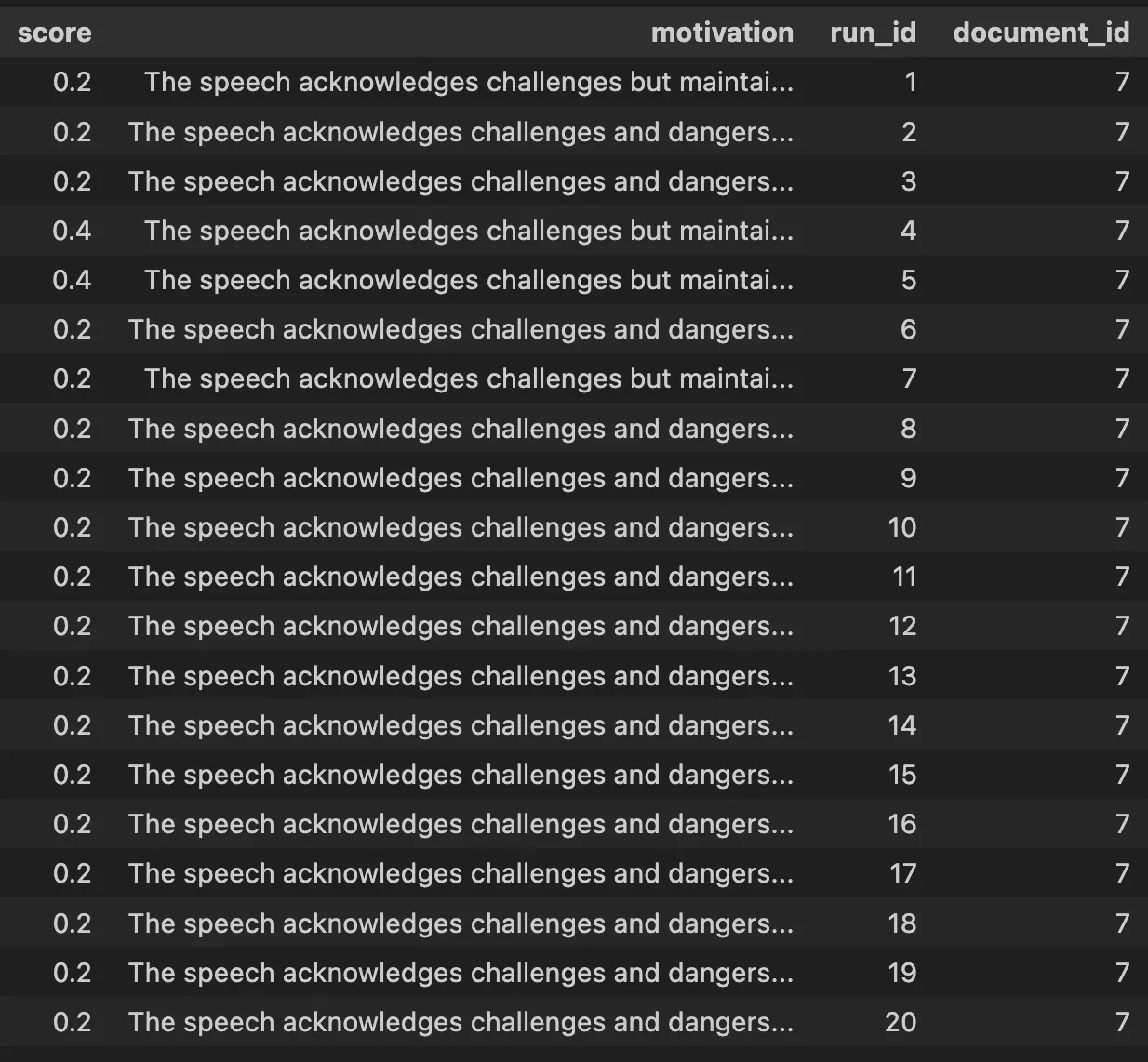

To evaluate the consistency of the model’s responses, I randomly selected 11 documents from the corpus and conducted 20 runs for each document, using different seeds each time. By analyzing how the answers are distributed across these runs, we can determine the model’s consistency.If the distribution of responses is narrow, it indicates that the model produces consistent outputs.

#select a random subset

frac = 0.5

random_subset = us_speech.sample(frac=frac)

random_subset

results_validation = []

run_ids = []

document_ids = []

timestamps = random_subset[‘year’].tolist()

total_runs = 20

for run_id in range(1, total_runs + 1):

for document_id in range(1, len(random_subset)):

seed = run_id * 1000

result = get_completion_from_messages(random_subset.iloc[document_id][‘speech’], model=“genAI-DC”, temperature=0, seed = seed)

results_validation.append(result)

run_ids.append(run_id)

document_ids.append(document_id)

current_timestamp = timestamps[document_id-1]

The following violin plot shows the distribution of the score across 20 runs for 11 documents:

To my surprise, for all the randomly selected documents except document id 7, there was no variability at all in the scores across the different runs for each of these documents — the model returned the same score every time across 20 runs. In other words, the model’s output was completely consistent for these documents across all runs.

Because there is no variation in the score, the confidence intervals (CIs) is a point estimate. This means that the 95% CIs for the mean score of each document based on multiple runs will have the same lower and upper bounds for all documents except document 7.

For Document 7, the confidence interval has a range from 0.19 to 0.25. This suggests there was some variability in the scores that the model returned for this document across different runs. The interval indicates that if you were to repeat the runs, the true mean score for Document 7 would fall between 0.19 and 0.25, 95% of the time.

A tight confidence interval is an indicator of high consistency in the model’s output. This might have to do with me setting the temperature being low (zero in this case), which reduces randomness in the model’s responses. Or could it be that the text and the prompt are so clear that there is no way for the model to make mistakes?

Whatever, this is good news, I guess! 😃

Evaluation for text summary task

To evaluate the performance of a LLM on summary task, I will use 3 methods: term-based matching using ROUGE score, semantic similarity to assess meaning and context alignment, and using LLM to evaluate LLM’s output itself.

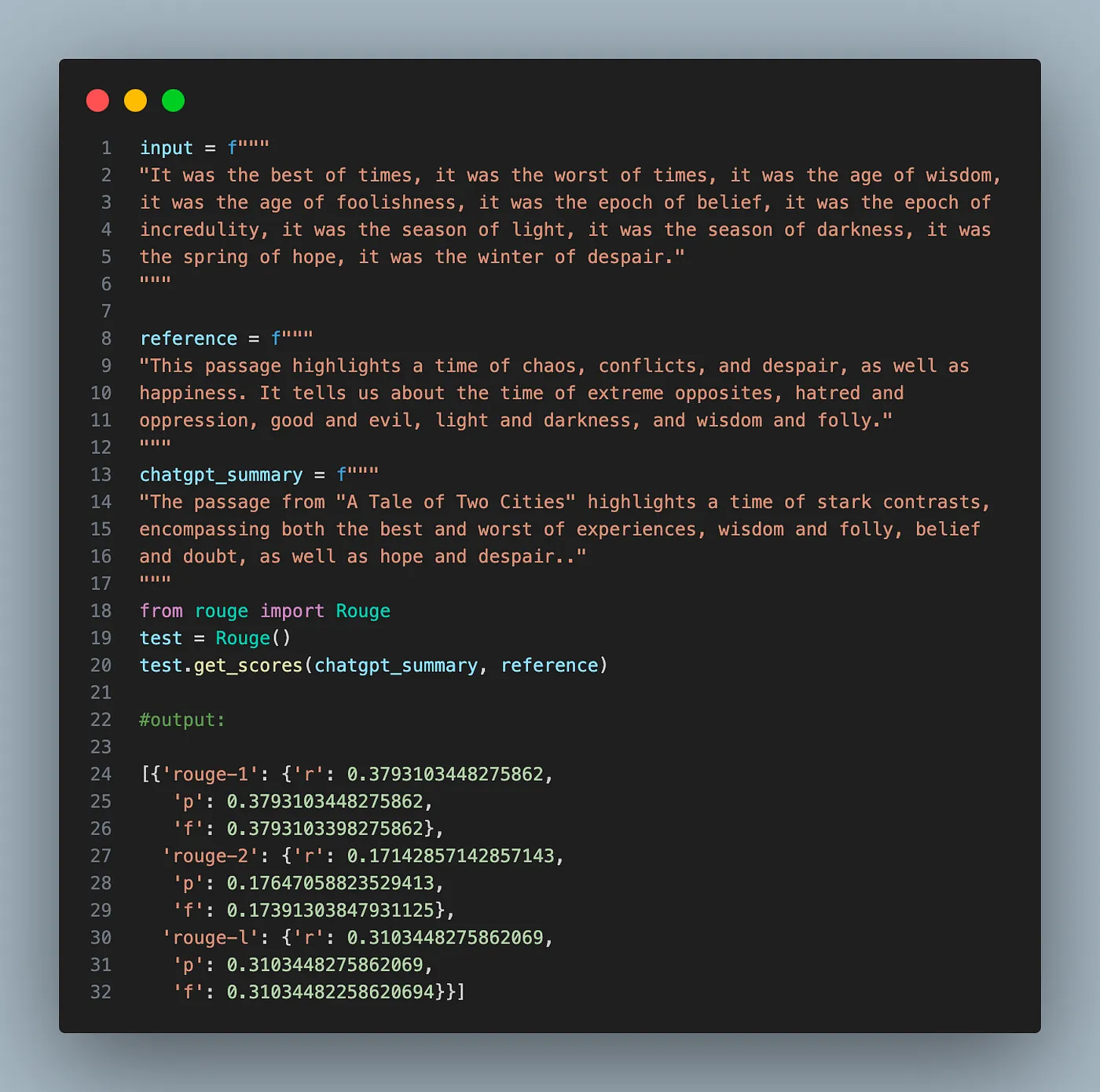

>» Evaluating using Term-based matching: ROUGE Score

ROUGE stands for Recall-Oriented Understudy for Gisting Evaluation and is a metric used to evaluate the overlap of words between a model-generated summary and a reference text, typically human-generated.

Essentially, ROUGE is a term-based matching score. A higher ROUGE score indicates more word matching between the model’s output and the human’s output. ROUGE has several variations namely ROUGE-1, ROUGE-2 and ROUGE-L.

- ROUGE-1 (Unigram-Based Scoring): Considers the overlap of individual words (unigrams) between the model’s summary and the reference.

- ROUGE-2 (Bigram-Based Scoring): Looks at pairs of consecutive words (bigrams), adding context to the matching process. E.g overlapping between “right” as in unigram or “human right”, as in bigram.

- ROUGE-L (Longest Common Subsequence Based Scoring): Evaluates the longest common subsequence in generated-summary and reference text, considering the sequence that appears in the same order, but not necessarily consecutively.

By using these different variations, you can understand different aspects of quality, i.e whether the most important words are present (rouge-1), whether they are in good phrases (rouge-2), or whether the overall flow and structure are well maintained (rouge-L).

To demonstrate the application of ROUGE, let us consider the example text from Charles Dickens’ “A Tale of Two Cities”. This is a very common example to explain Bag-of-word, but it is also an excellent choice to evaluate the summarization due to its rich language and depth.

input = f"""

“It was the best of times, it was the worst of times, it was the age of wisdom,

it was the age of foolishness, it was the epoch of belief, it was the epoch of

incredulity, it was the season of light, it was the season of darkness, it was

the spring of hope, it was the winter of despair.”

"""

reference = f"""

“This passage highlights a time of chaos, conflicts, and despair, as well as

happiness. It tells us about the time of extreme opposites, hatred and

oppression, good and evil, light and darkness, and wisdom and folly.”

"""

chatgpt_summary = f"""

“The passage from “A Tale of Two Cities” highlights a time of stark contrasts,

encompassing both the best and worst of experiences, wisdom and folly, belief

and doubt, as well as hope and despair..”

"""

from rouge import Rouge

test = Rouge()

test.get_scores(chatgpt_summary, reference)

This code evaluates summaries using ROUGE metrics and returns recall, precision, and F1-score for each ROUGE variations.

Obviously, the scores on summary output seem low, with ROUGE-1 achieving the highest score of 0.379 for both recall, precision and f1. This suggests a low degree of word-for-word overlap between the model summary and the reference text. Actually, in this example, a low score might be expected. The original input text contains nuanced meanings and capturing the essence of the text is more important than matching the exact wording. Therefore, a low ROUGE score doesn’t always necessarily mean bad summary.

While ROUGE offer quantitative measures, it rewards summaries with greater lexical similarity while penalizes summaries with less lexical similarity. Hence, they often fall short of capturing the true essence of a text, especially when synonyms or paraphrased language are used.

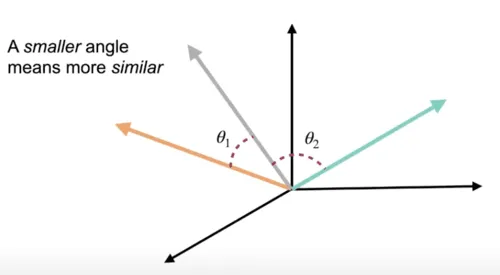

>» Evaluating using semantic similarity

To overcome the limitation of ROUGE, we need to move beyond the lexical matching by using semantic matching. Semantic similarity focuses on understanding and comparing the meaning behind the words rather than just the words themselves.

The idea behind semantic matching is to convert both reference text and model-generated output into vectors — or the so-called embedding and try to compare how “close” these vectors are using i.e cosine similarity. If you want to understand how semantic matching works, I have another article that can be useful.

By doing so, semantic matching captures relevant terms and synonyms by comparing the embeddings of the model’s summary and the reference text. As such, it captures nuances and meaning that might be overlooked by simple lexicon matching.

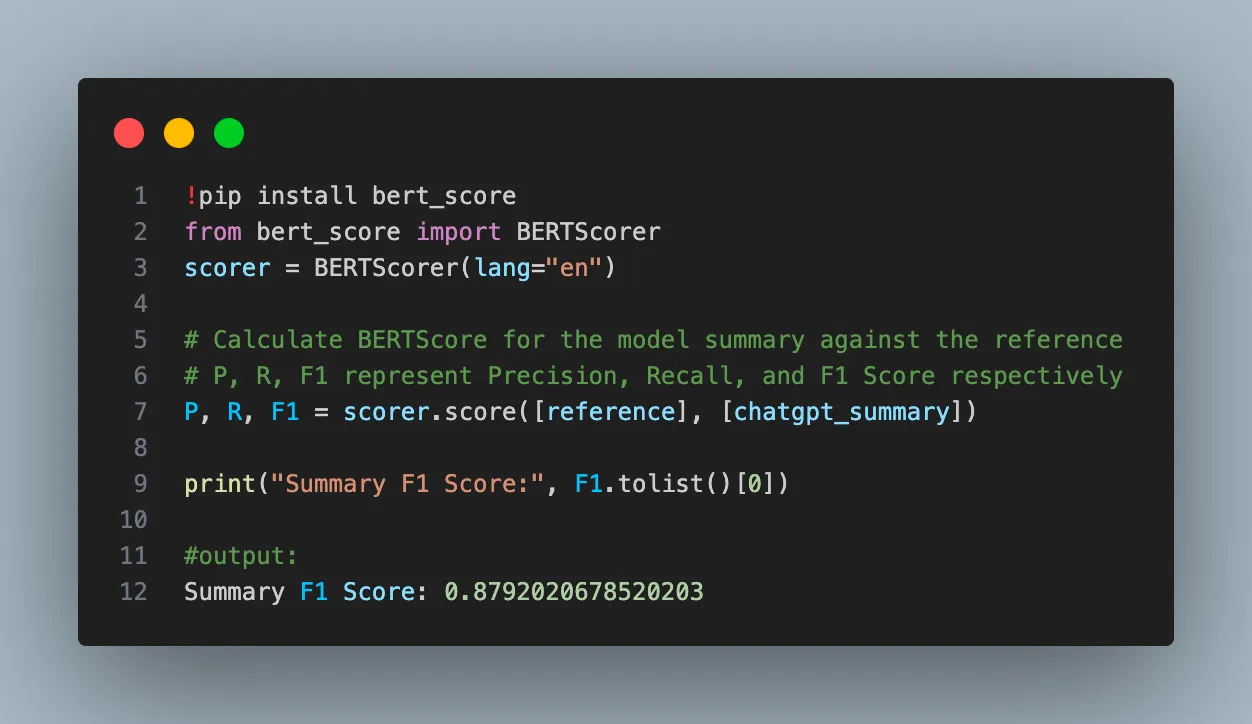

I will be using BERTScore to compare the embedding of model-generated summary and reference text.

!pip install bert_score

from bert_score import BERTScorer

scorer = BERTScorer(lang=“en”)

# Calculate BERTScore for the model summary against the reference

# P, R, F1 represent Precision, Recall, and F1 Score respectively

P, R, F1 = scorer.score([reference], [chatgpt_summary])

print(“Summary F1 Score:”, F1.tolist()[0])

The above code will return the following F1 score:

The F1 score returned by BERTScore is 0.879, which suggests that there is a strong semantic similarity between the reference and model summary, which means there is a strong theme overlap between the generated summary and the human summary.

However, we should always take this score with a grain of salt. While these scores are indicative of semantic similarity, they may not fully grasp nuances in tone, connotation, or subtle shifts in context that may affect the meaning. A human evaluator would be better at noticing this. Recently there is a new paper that explores the combination of ROUGE with semantics, maybe worth reading.

>» LLM-as-a-judge

Similarly to how we evaluate the output of sentiment analysis task, here we use LLMs to decode LLMs again. We ask for the same LLM that generated the output we want to evaluate and for the LLM to tell you how good the generated output was.

We will implement an example reference-free text evaluation borrowed from OpenAI Cookbook. This method evaluates the quality of generated content based solely on the source document and input prompt, without any ground truth or reference text.

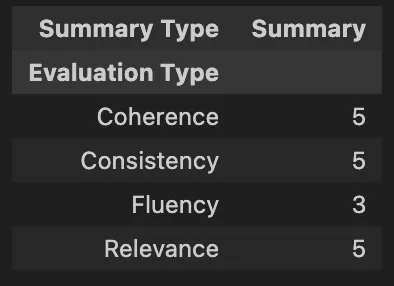

The method is designed to evaluate a given summary of the original input document against various metrics like relevance, coherence, consistency, and fluency. We will start with the EVALUATION_PROMPT_TEMPLATE which is a string written to instruct the model on how to assess a summary against a source document following certain criteria and metrics. Here’s how to implement it:

EVALUATION_PROMPT_TEMPLATE = """

You will be given one summary written for an article. Your task is to rate the summary on one metric.

Please make sure you read and understand these instructions very carefully.

Please keep this document open while reviewing, and refer to it as needed.

Evaluation Criteria:

{criteria}

Evaluation Steps:

{steps}

Example:

Source Text:

{document}

Summary:

{summary}

Evaluation Form (scores ONLY):

- {metric_name}

"""

# Metric 1: Relevance

RELEVANCY_SCORE_CRITERIA = """

Relevance(1-5) - selection of important content from the source. \

The summary should include only important information from the source document. \

Annotators were instructed to penalize summaries which contained redundancies and excess information.

"""

RELEVANCY_SCORE_STEPS = """

1. Read the summary and the source document carefully.

2. Compare the summary to the source document and identify the main points of the article.

3. Assess how well the summary covers the main points of the article, and how much irrelevant or redundant information it contains.

4. Assign a relevance score from 1 to 5.

"""

# Metric 2: Coherence

COHERENCE_SCORE_CRITERIA = """

Coherence(1-5) - the collective quality of all sentences. \

We align this dimension with the DUC quality question of structure and coherence \

whereby “the summary should be well-structured and well-organized. \

The summary should not just be a heap of related information, but should build from sentence to a\

coherent body of information about a topic.”

"""

COHERENCE_SCORE_STEPS = """

1. Read the article carefully and identify the main topic and key points.

2. Read the summary and compare it to the article. Check if the summary covers the main topic and key points of the article,

and if it presents them in a clear and logical order.

3. Assign a score for coherence on a scale of 1 to 5, where 1 is the lowest and 5 is the highest based on the Evaluation Criteria.

"""

# Metric 3: Consistency

CONSISTENCY_SCORE_CRITERIA = """

Consistency(1-5) - the factual alignment between the summary and the summarized source. \

A factually consistent summary contains only statements that are entailed by the source document. \

Annotators were also asked to penalize summaries that contained hallucinated facts.

"""

CONSISTENCY_SCORE_STEPS = """

1. Read the article carefully and identify the main facts and details it presents.

2. Read the summary and compare it to the article. Check if the summary contains any factual errors that are not supported by the article.

3. Assign a score for consistency based on the Evaluation Criteria.

"""

# Metric 4: Fluency

FLUENCY_SCORE_CRITERIA = """

Fluency(1-3): the quality of the summary in terms of grammar, spelling, punctuation, word choice, and sentence structure.

1: Poor. The summary has many errors that make it hard to understand or sound unnatural.

2: Fair. The summary has some errors that affect the clarity or smoothness of the text, but the main points are still comprehensible.

3: Good. The summary has few or no errors and is easy to read and follow.

"""

FLUENCY_SCORE_STEPS = """

Read the summary and evaluate its fluency based on the given criteria. Assign a fluency score from 1 to 3.

"""

Then we created the get_geval_score function that takes criteria, steps, the original document, the summary, and the metrics such as relevance, coherence, consistency, and fluency specified in the EVALUATION_PROMPT_TEMPLATE.

We sends it to the GPT-3.5-Turbo model:

def get_geval_score( criteria: str, steps: str, document: str, summary: str, metric_name: str):

prompt = EVALUATION_PROMPT_TEMPLATE.format(

criteria=criteria,

steps=steps,

metric_name=metric_name,

document=document,

summary=summary,

)

response = openai.ChatCompletion.create(

deployment_id=“GPT-3.5-Turbo”,

messages=[{“role”: “user”, “content”: prompt}],

temperature=0,

max_tokens=5,

top_p=1,

frequency_penalty=0,

presence_penalty=0,

)

return response.choices[0].message.content

evaluation_metrics = {

“Relevance”: (RELEVANCY_SCORE_CRITERIA, RELEVANCY_SCORE_STEPS),

“Coherence”: (COHERENCE_SCORE_CRITERIA, COHERENCE_SCORE_STEPS),

“Consistency”: (CONSISTENCY_SCORE_CRITERIA, CONSISTENCY_SCORE_STEPS),

“Fluency”: (FLUENCY_SCORE_CRITERIA, FLUENCY_SCORE_STEPS),

}

summaries = {“Summary”: chatgpt_summary}

data = {“Evaluation Type”: [], “Summary Type”: [], “Score”: []}

for eval_type, (criteria, steps) in evaluation_metrics.items():

for summ_type, summary in summaries.items():

data[“Evaluation Type”].append(eval_type)

data[“Summary Type”].append(summ_type)

result = get_geval_score(criteria, steps, input, summary, eval_type)

score_num = int(result.strip())

data[“Score”].append(score_num)

pivot_df = pd.DataFrame(data, index=None).pivot(

index=“Evaluation Type”, columns=“Summary Type”, values=“Score”

)

pivot_df

This above code returns the following output:

Overall, the chatgpt-generated summary appears to be consistent with the human summary in terms of Coherence, Relevance and Consistency, according to the scores on these evaluation metrics.

It is worth noting that LLM-based metrics are strongly sensitive to the specific wording and system messages/prompts. This sensitivity highlights the importance of carefully designing your evaluation prompts to concisely reflect the intent.

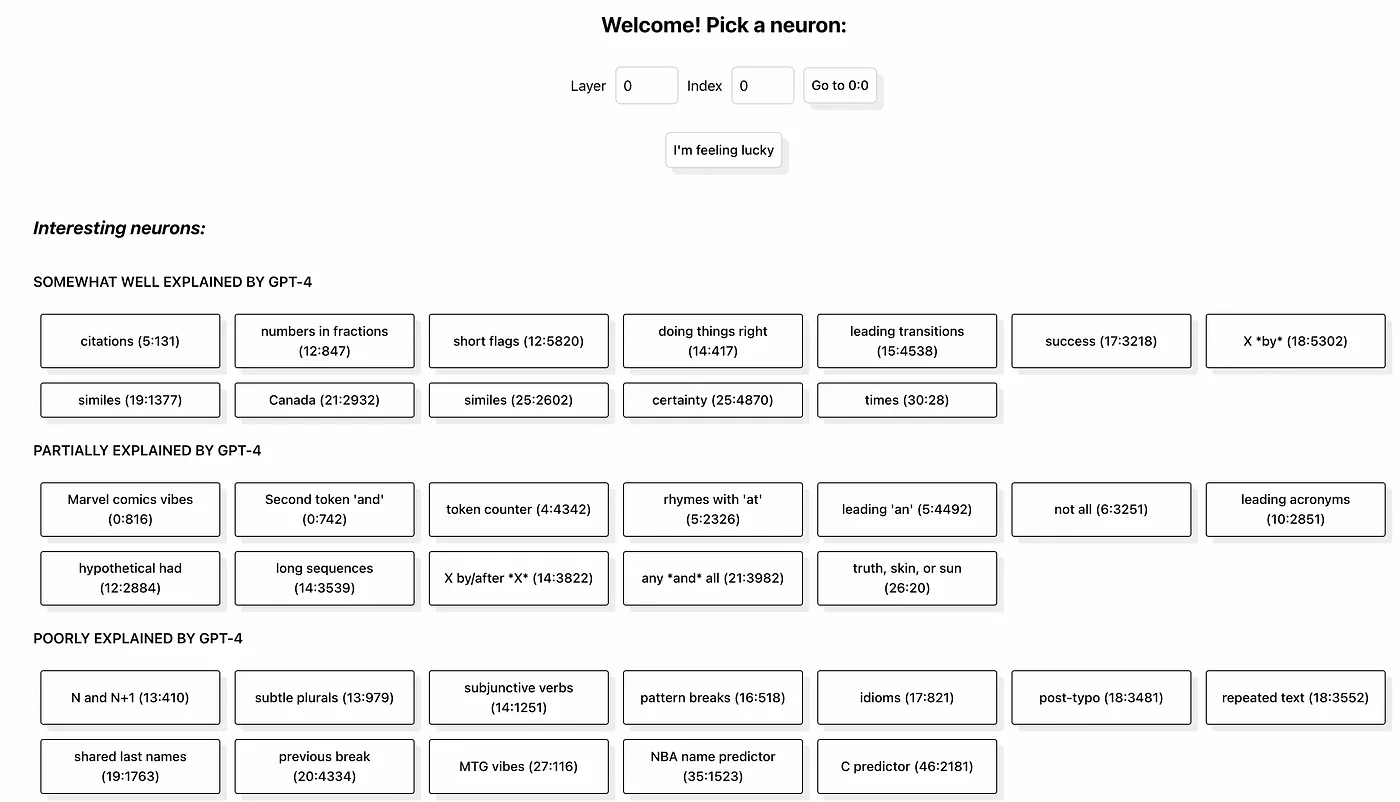

Model interpretability — explain the role of each neuron

Another way of letting AI assess itself, is through something openAI calls automated interpretability. One simple approach to the interpretability model is to first understand what the individual components (neurons and attention heads) are doing. They are developing a tool that allows you to peak inside neurons and ask “what were you responsible for?”. Although the tool works quite well on smaller LLMs like GPT2, more work needs to be done to let it explain the outputs of larger models like GPT3.5 and GPT4.

The central idea and approach are quite simple, we start by recording the activation score of a neuron while the AI is reading/writing text, and then ask a second AI for an explanation. These explanations can be high-level like:

| words related to colors

or quite low level as:

| single digit numbers

and by looking at various neurons in different layers, you can get a better sense of how an LLM produces its answer. What’s interesting is that the deeper you peek into the neural network of an LLM, the more abstract and high-level the explanations become. This is quite similar to vision models, where the first few convolutional layers detect simple patterns like straight lines, and more abstract patterns are detected more deeply.

The way openAI assesses the performance of their tool is quite interesting because they again rely on AI to assess AI. After the first step of producing an explanation, the AI is asked to reproduce the activation scores given the text and explanation. For example, given this text:

| … and turned to our Smartphone 2011 suite of battery life tests which I’ve described in detail before.

the real activations were equal to:

| [-0.15, 0.13, -0.16, 1.80, 0.82, 0.19, 0.25, 2.54, -0.16, 1.88, 3.34, 4.12, -0.13, 2.71, 0.40, 1.20, 0.82, 0.86, 0.15, -0.13]

while the scores that were simulated were equal to

| [0.04, 0.17, 0.11, 0.05, 0.62, 0.29, 0.12, 0.20, 0.04, 1.53, 1.08, 1.67, 0.18, 0.34, 0.23, 0.34, 0.07, 0.46, 0.23, 0.02]

and using the “real” activations and “simuated” activations, they automatically score the explanation based on how well the simulated activations match the real activations.

OpenAI also releases a neuron viewer using the dataset they use for the explanations for all neurons in GPT-2. You can view the neurons and explanations is using the public website.

Conclusion

In this article, we explored how to evaluate the output of LLMs with a focus on sentiment analysis and text summary. We discussed how to use LLM to evaluate LLM’s output and some strategies for debugging, interpreting and improving accuracy.

Thanks for reading!

If you are keen on reading more of my writing but can’t choose which one, no worries, I have picked one for you:

GenAI’s products: Move fast and fail

_Building a cool and fancy demo is easy, building a final product is not._pub.towardsai.net

Do You Understand Me? Human and Machine Intelligence

_Can we ever understand human intelligence and make computers intelligent in the same way?_pub.towardsai.net

References

openai/automated-interpretability (github.com)

Language models can explain neurons in language models (openai.com)

[2211.09110] Holistic Evaluation of Language Models (arxiv.org)

ROUGE-SEM: Better evaluation of summarization using ROUGE combined with semantics — ScienceDirect