Where is AI headed? It is about agents.


“AI agent workflows will drive massive AI progress this year” — Andrew Ng

I recently watched Andrew Ng’s talk on AI agents, or what he calls agentic systems. Inspired by his insights, I would like to delve into understanding AI agents better. In this article, we will learn what agentic systems are and how to build a simple agentic system from scratch.

What is an Agentic Workflow and why is it better?

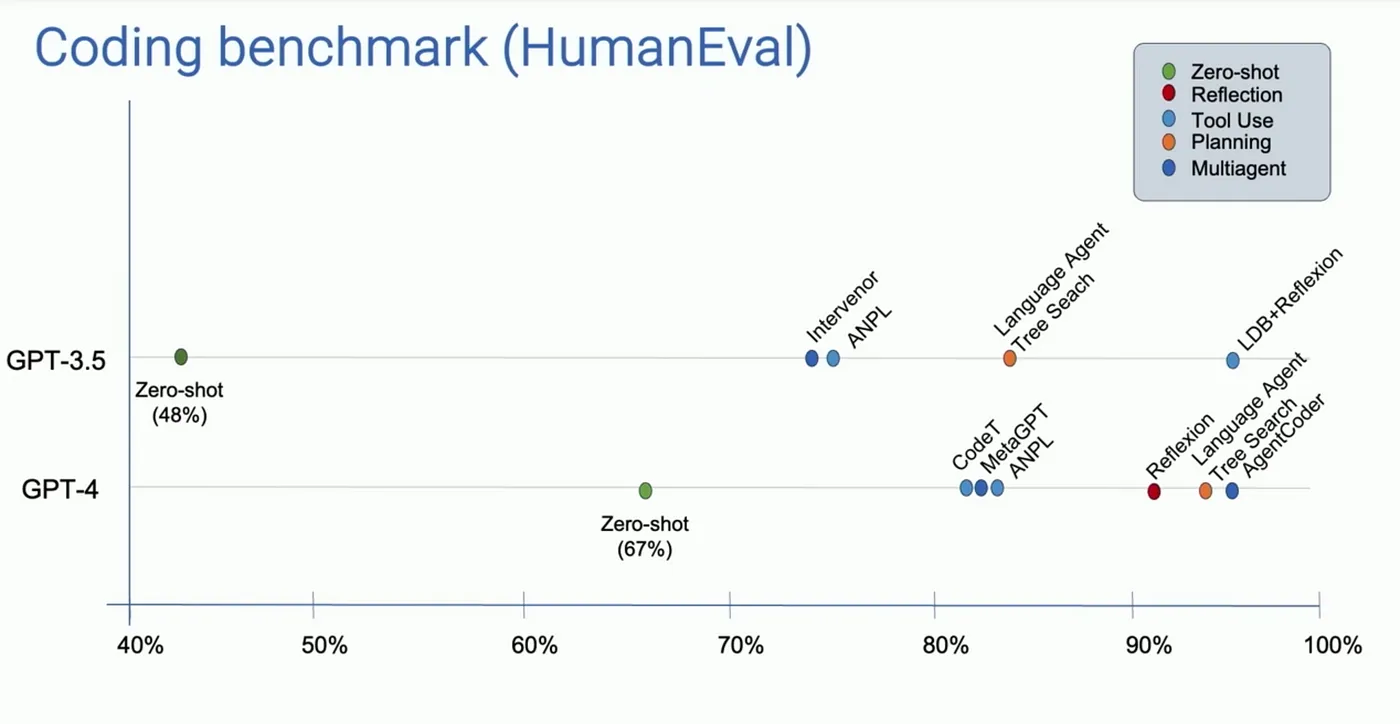

The idea of agents has been around for a while, so what's new here? In his talk, Andrew Ng explains how agentic systems use design patterns such as reflection, tool use, planning, and multi-agent collaboration to outperform zero-shot prompting.

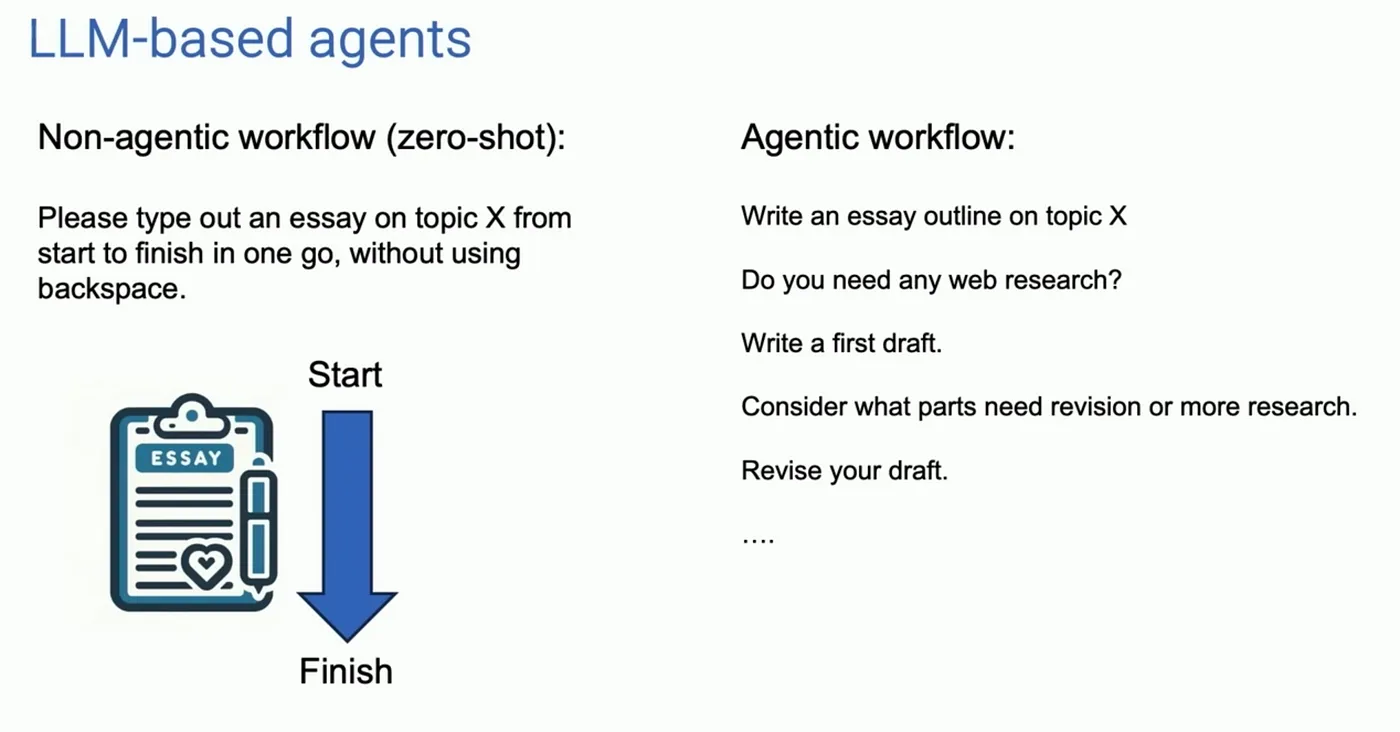

As Andrew Ng clarifies in his post, an agent does not simply prompt the model once (zero-shot). Instead, it is an autonomous entity that, given high-level instructions, plans, uses tools, and performs multiple iterative steps to achieve a goal.

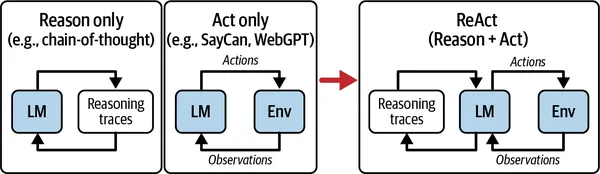

The agent uses chain-of-thought reasoning, a process of guiding an LLM through a series of steps or logical connections to solve a complex problem. By breaking a complex problem down into smaller, more manageable components, the LLM can produce a more thorough and effective solution.

We will experience “agentic moments” when we see an AI that thinks through a complex problem, breaks it down into smaller components, and then plans and executes a task without any human intervention.

The key point is that we do not tell the agent what to do; it decides by itself which actions to take to complete the task.

And because agentic workflows let the AI work iteratively through each step, the agent can recover from its own mistakes, which in turn can yield a large improvement in performance.

Key Components of Agentic Systems

Agentic systems typically combine the following design patterns:

- Reflection: Agents that analyze their own outputs and identify areas for improvement.

- Tool Use: Agents that access and use the tools available to them to complete a complex task.

- Planning: Agents that think ahead, consider multiple options, and make informed decisions.

- Multi-Agent Collaboration: Multiple agents that work together to solve a big, complex problem.
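To make the reflection pattern concrete, here is a minimal sketch in Python, assuming an LLM can be modelled as any function from a prompt string to a reply string. The function name `reflect_and_revise`, the prompts, and the `OK` stopping convention are illustrative assumptions, not part of Andrew Ng's talk; `fake_llm` is a scripted stand-in so the sketch runs without an API key.

```python
from typing import Callable

# Assumption: an "LLM" is any function that maps a prompt string to a reply string.
LLM = Callable[[str], str]

def reflect_and_revise(llm: LLM, task: str, max_rounds: int = 2) -> str:
    """Reflection pattern: draft an answer, critique it, then revise it."""
    draft = llm(f"Complete this task:\n{task}")
    for _ in range(max_rounds):
        critique = llm(
            f"Task:\n{task}\n\nDraft:\n{draft}\n\n"
            "List concrete problems with the draft, or reply OK if there are none."
        )
        if critique.strip() == "OK":
            break  # the model found nothing left to improve
        draft = llm(
            f"Task:\n{task}\n\nDraft:\n{draft}\n\nCritique:\n{critique}\n\n"
            "Rewrite the draft to address the critique."
        )
    return draft

# Usage with a scripted stand-in: it criticizes the first draft and accepts the revision.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Complete"):
        return "draft v1"
    if "List concrete problems" in prompt:
        return "OK" if "draft v2" in prompt else "Too short."
    return "draft v2"

final = reflect_and_revise(fake_llm, "Write a tagline for an AI agent blog")
```

The loop is capped by `max_rounds` so a model that never answers `OK` cannot keep revising forever.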

Agentic and non-agentic workflows:

What makes a workflow “agentic” or “non-agentic”? For one, agents interact with an environment. In the example above, this means the agent asks questions, gets input, asks follow-up questions, gets more input, and bit by bit writes a whole essay. The more traditional, non-agentic way is to start with a single prompt from which the model creates a single output, with no opportunity to take in new information.

Agentic workflows can be made more complex by employing a team of agents. A complex task is broken down into subtasks, and each subtask is handled by an agent playing a potentially different role, such as software engineer, product manager, designer, or quality assurance engineer.

Interestingly, in multi-agent collaboration the agents can use different language models or the same one, simply prompted in different ways. For example, to build a software product, one agent can focus on UI design, another on backend development, a third on database design, and yet another on writing documentation. Each agent is given instructions tailored to its task, allowing the agents to specialise and collaborate effectively.
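A rough sketch of the "same model, different prompts" idea, assuming each agent is just a role-specific system prompt paired with a shared model. `ROLE_PROMPTS`, `build_messages`, and `run_team` are hypothetical names; the message format follows the OpenAI chat convention used later in this post, and `echo_llm` stands in for a real model call.

```python
# Hypothetical role prompts mirroring the software-product example above.
ROLE_PROMPTS = {
    "ui_designer": "You are a UI designer. Focus only on the user interface.",
    "backend_engineer": "You are a backend engineer. Focus only on server-side logic.",
    "database_designer": "You are a database designer. Focus only on the data model.",
    "tech_writer": "You are a technical writer. Focus only on documentation.",
}

def build_messages(role: str, subtask: str) -> list[dict]:
    """Chat messages for one agent: the model is shared, the system prompt differs."""
    return [
        {"role": "system", "content": ROLE_PROMPTS[role]},
        {"role": "user", "content": subtask},
    ]

def run_team(llm, subtasks: dict[str, str]) -> dict[str, str]:
    """Send each subtask to the agent for its role and collect the outputs by role."""
    return {role: llm(build_messages(role, task)) for role, task in subtasks.items()}

# Usage with a stand-in "model" that echoes the first sentence of its system prompt
echo_llm = lambda messages: messages[0]["content"].split(".")[0]
outputs = run_team(echo_llm, {
    "ui_designer": "Design the login page",
    "tech_writer": "Write the README",
})
```

With the `Agent` class from Step 1, each role would correspond to `Agent(ROLE_PROMPTS[role])`. A real team would also need a way to pass one agent's output on to the next, which this sketch leaves out.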

Build an AI Agent from scratch

Agents can take various actions, such as executing a Python function or analyzing data and proposing an answer to a question. After each action, the agent observes what happens and decides whether it is finished or which action to take next. To demonstrate, I will build an AI agent that generates ideas for my Medium posts.

Through this exercise, we will learn about the components that make up autonomous agents, such as inputs, available actions and observations.

At a high level, I will provide the actions the AI can take: (1) getting data related to the AI agent topic from Google Trends, (2) analyzing the insights, and (3) proposing ideas for my Medium post.

The agent will continuously loop through a series of actions (available to it and chosen by itself) and observations until there are no further actions.
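The loop itself can be sketched offline before building the real thing. In this minimal sketch, `run_loop`, the two toy tools, and `scripted_llm` are hypothetical: the scripted replies stand in for the decisions a real model would make, and the `Action: name: input` line format anticipates the one used in the prompt in Step 2.

```python
import re
from typing import Callable

# Matches lines like "Action: get_data: AI agent"; the input part may be empty.
action_re = re.compile(r"^Action: (\w+):\s*(.*)$")

def run_loop(llm: Callable[[str], str], tools: dict, question: str, max_turns: int = 5):
    """Minimal Thought/Action/Observation loop: stops when a reply contains no Action."""
    next_prompt, observation = question, ""
    for _ in range(max_turns):
        reply = llm(next_prompt)
        matches = [m for line in reply.split("\n") if (m := action_re.match(line))]
        if not matches:
            return reply  # no action requested: treat the reply as the final answer
        name, arg = matches[0].groups()
        # an empty input falls back to the previous observation
        observation = tools[name](arg or observation)
        next_prompt = f"Observation: {observation}"
    return None  # gave up after max_turns

# Usage with hypothetical toy tools and a scripted stand-in for the LLM
tools = {
    "get_data": lambda kw: f"related topics for {kw}",
    "summarize": lambda text: f"summary of [{text}]",
}
replies = iter([
    "Thought: I need data.\nAction: get_data: AI agent",
    "Thought: Now I should summarize it.\nAction: summarize:",
    "Answer: Write about AI agents.",
])
seen_prompts = []

def scripted_llm(prompt: str) -> str:
    seen_prompts.append(prompt)  # record what the "model" was shown each turn
    return next(replies)

answer = run_loop(scripted_llm, tools, "Give me post ideas about AI agents.")
```

Each observation is sent back as the next prompt, and the loop ends as soon as a reply contains no `Action:` line.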

Step 1: Set up the Agent

In this step, we will create an Agent class to manage a conversation between two roles: user and assistant. The class includes methods to handle messages and generate responses using OpenAI’s API, and it keeps track of the conversation over time.

In the code below, we initialize the agent with an empty message history (plus the system prompt, if one is given). By defining `__call__` and `execute` methods, we can easily pass a message to the agent, which records it and forwards the whole conversation to the LLM.

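```python
from openai import OpenAI

# Uses the openai>=1.0 client; the API key is read from the OPENAI_API_KEY environment variable
client = OpenAI()

class Agent:
    # initialize: store the system prompt and start the message history with it
    def __init__(self, system=""):
        self.system = system
        self.messages = []
        if self.system:
            self.messages.append({"role": "system", "content": system})

    # call: record the user message, query the LLM, record and return its reply
    def __call__(self, message):
        self.messages.append({"role": "user", "content": message})
        result = self.execute()
        self.messages.append({"role": "assistant", "content": result})
        return result

    # execute: send the full conversation history to the model
    def execute(self):
        completion = client.chat.completions.create(
            model="gpt-4o",
            temperature=0,
            messages=self.messages,
        )
        return completion.choices[0].message.content
```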

Step 2: Set up the ReAct Prompt

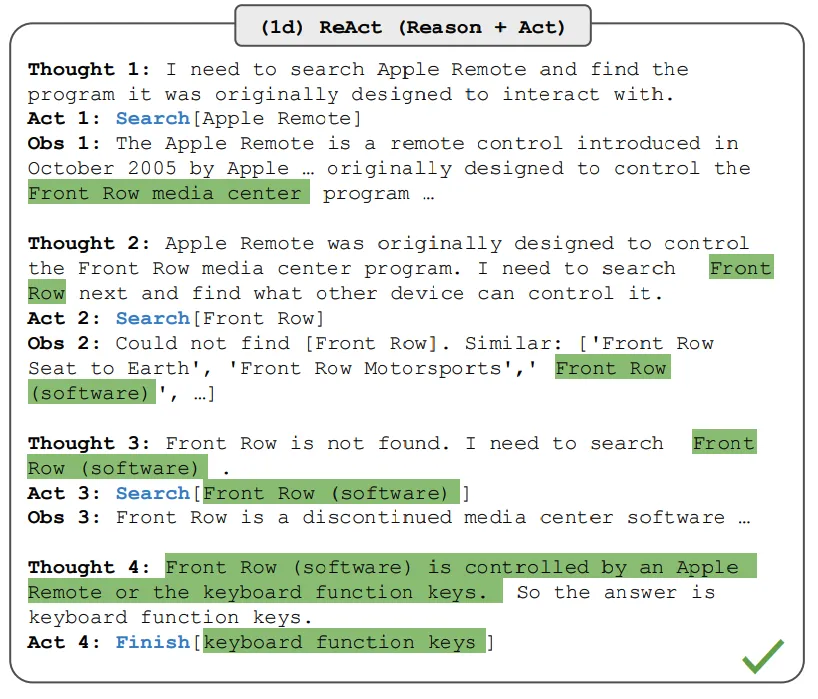

Reason and Act (ReAct)

In this step, we want to create a ReAct agent that takes our specific system message, the prompt, into account.

Many agent frameworks ultimately aim to improve LLM responses, and one of them is ReAct, an extension of chain-of-thought that lets an LLM reason through its thoughts, take actions via tools, and then receive observations from those actions.

These observations are in turn used to reason about the right tool or action for the next step. The LLM continues to reason until it reaches a final answer or a maximum number of iterations:

1. Interpret the environment with a thought.

2. Decide on an action.

3. Act.

4. Repeat steps 1–3 until a final answer or the maximum number of iterations is reached.

In this step, we create a detailed prompt that explains the loop of thought, action, and observation, and tells the model to finish by outputting an answer.

A typical ReAct prompt for an agent includes lines like the following:

You are looking to accomplish: {goal}

You run in a loop of Thought, Action, PAUSE, Observation.

Your available actions are: {tools}
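Filling the `{goal}` and `{tools}` placeholders is plain string formatting. A small sketch, where `REACT_TEMPLATE`, `render_prompt`, and the one-line tool descriptions are assumptions for illustration:

```python
# Hypothetical template mirroring the three lines above
REACT_TEMPLATE = (
    "You are looking to accomplish: {goal}\n"
    "You run in a loop of Thought, Action, PAUSE, Observation.\n"
    "Your available actions are: {tools}"
)

# Hypothetical one-line descriptions for the tools built in Step 3
tool_descriptions = {
    "generate_and_execute_pytrend_code": "fetch related topics from Google Trends",
    "analyze_trends_data": "summarize the trends data",
    "generate_medium_post_ideas": "propose Medium post ideas",
}

def render_prompt(goal: str, descriptions: dict[str, str]) -> str:
    # list each tool as "name (description)", separated by commas
    tools = ", ".join(f"{name} ({desc})" for name, desc in descriptions.items())
    return REACT_TEMPLATE.format(goal=goal, tools=tools)

system_prompt = render_prompt("Propose Medium post ideas about AI agents", tool_descriptions)
print(system_prompt)
```

Generating the tool list from the same dictionary that holds the functions is one way to keep the prompt and the code from drifting apart.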

And here is the ReAct prompt:

prompt = """

You run in a loop of Thought, Action, PAUSE, Observation.

At the end of the loop you output an Answer.

Use Thought to describe your thoughts about the question you have been asked.

Use Action together with an input to run one of the actions available to you - then return PAUSE.

Action input should be the Observation from the previous Action.

Observation will be the result of running those actions on those inputs.

Your available actions are:

generate_and_execute_pytrend_code:

Create the pytrend code to get the related keywords from Google Trends for the given keywords, execute the code returns the data.

analyze_trends_data:

Analyze the trends table data and returns a summary of the insights from the data.

generate_medium_post_ideas: <trend_summary>

Generates Medium post ideas based on the trend summary.

An Example session:

Question: What are the related keywords for the search term “AI agent”?

Thought: I should generate and execute the pytrend code to fetch related topics or keywords for AI agent.

Action: generate_and_execute_pytrend_code: AI agent

PAUSE

Observation:

```

0 Artificial intelligence Field of study 100

1 Intelligent agent Topic 90

2 Software agent Topic 70

3 Multi-agent system Programming paradigm 65 ```

Thought: I should analyze the trends data to get a summary of the insights.

Action: analyze_trends_data:

```

0 Artificial intelligence Field of study 100

1 Intelligent agent Topic 90

2 Software agent Topic 70

3 Multi-agent system Programming paradigm 65 ```

PAUSE

Observation: <trend_summary>

Thought: I should generate Medium post ideas based on the trend summary.

Action: generate_medium_post_ideas: <trend_summary>

PAUSE

Observation: <medium_post_ideas>

Answer: The trending topics are <trend_summary> and here are some Medium post ideas: <medium_post_ideas>

“”".strip()

Step 3. Create the actions/tools

A tool is simply a predefined function that permits the agent to take a specific action.

Now we need to provide the agent with the tools specified in the prompt. In this step, we implement the available actions that will be given to the agent.

The available actions, or action space, are the range of permissible actions an agent can undertake to accomplish the task.
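One way to make the action space explicit, sketched here under the assumption that every tool takes and returns a string, is a registry built with a decorator, plus a dispatcher that refuses anything outside it. `ACTION_SPACE`, `action`, `dispatch`, and the two toy tools are hypothetical; the post itself uses a plain `known_actions` dictionary, introduced below, which plays the same role.

```python
from typing import Callable

# Hypothetical registry: the action space is exactly the set of registered functions.
ACTION_SPACE: dict[str, Callable[[str], str]] = {}

def action(fn: Callable[[str], str]) -> Callable[[str], str]:
    """Decorator that adds a function to the action space under its own name."""
    ACTION_SPACE[fn.__name__] = fn
    return fn

@action
def word_count(text: str) -> str:
    return str(len(text.split()))

@action
def shout(text: str) -> str:
    return text.upper()

def dispatch(name: str, arg: str) -> str:
    # refuse anything outside the action space instead of guessing
    if name not in ACTION_SPACE:
        raise ValueError(f"Unknown action: {name}")
    return ACTION_SPACE[name](arg)
```

For example, `dispatch("word_count", "AI agents plan ahead")` runs a registered tool, while an action name the model invents raises an error rather than executing anything.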

In each action function, we define what that action does. An action can, for example, execute code or call the LLM’s API with a different prompt. In this case, I am using the same model (GPT-4o) for every action, but you can choose to call different LLMs for different actions.

```python
from pytrends.request import TrendReq

# These tools reuse the `client = OpenAI()` object created in Step 1

def generate_and_execute_pytrend_code(keyword):
    def fetch_related_trending_topics(keyword):
        # Initialize pytrends
        pytrends = TrendReq(hl="en-US", tz=360)
        # Build the payload for the specified keyword over the last 7 days
        pytrends.build_payload([keyword], cat=0, timeframe="now 7-d", geo="", gprop="")
        # Fetch related topics: a dict keyed by keyword, each holding "top" and "rising" DataFrames
        related_topics = pytrends.related_topics()
        # Extract the top related topics, if any
        if keyword in related_topics and "top" in related_topics[keyword]:
            return related_topics[keyword]["top"]
        return None

    # usage
    related_trending_topics = fetch_related_trending_topics(keyword)
    return related_trending_topics

def analyze_trends_data(trends_data):
    analysis_prompt = f"""
    You are an AI assistant.
    Analyze and summarize the following insight: {trends_data}
    """
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "system", "content": analysis_prompt}],
        temperature=0,
    )
    return response.choices[0].message.content

def generate_medium_post_ideas(trend_summary):
    analysis_prompt = f"""
    You are a technical content writer. Based on the analysis
    from the Google trend data for the given keyword and its
    trend summary, you will generate topic ideas for Medium post.
    The trend_summary is as follows:
    =======
    {trend_summary}
    =======
    """
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "system", "content": analysis_prompt}],
        temperature=0,
    )
    return response.choices[0].message.content
```

Next, we create a dictionary called known_actions that maps action names to functions. This allows the agent to dynamically call whichever function it decides on, based on the action named in its response. The agent chooses which actions to take as a result of the thought process it goes through:

```python
# Known actions that are made available to the agent
known_actions = {
    "generate_and_execute_pytrend_code": generate_and_execute_pytrend_code,
    "analyze_trends_data": analyze_trends_data,
    "generate_medium_post_ideas": generate_medium_post_ideas,
}
```

Step 4: Automate the agent through all the actions

Below we define the thought loop for the agentic workflow. The loop in the query function manages the interaction between the user and the agent. It starts by initializing the agent with a prompt and processes the user’s question. In each iteration, the agent generates a response with a thought, identifies any actions (available to it) within the response and executes the corresponding functions from the known_actions dictionary. The resulting observation is then used to update the next prompt. This process continues for a specified number of turns or until no further actions are identified:

```python
import re

# Regex for action selection, e.g. "Action: analyze_trends_data: <input>".
# \s* lets the input be empty, as in the analyze_trends_data step of the example session.
action_re = re.compile(r"^Action: (\w+):\s*(.*)$")

# Function to query the agent and handle its actions
def query(question, keyword, max_turns=5):
    # initialize the agent with the ReAct prompt
    i = 0
    bot = Agent(prompt)
    next_prompt = question
    observation = ""
    # run through the loop at most max_turns times (the number of times the agent thinks and responds)
    while i < max_turns:
        i += 1
        # interact with the agent; it produces a Thought and possibly an Action
        result = bot(next_prompt)
        print(result)
        # identify actions in the response
        actions = [
            action_re.match(a)
            for a in result.split("\n")
            if action_re.match(a)
        ]
        if actions:
            # there is an action to run
            action, action_input = actions[0].groups()
            if action not in known_actions:
                raise Exception(f"Unknown action: {action}")
            if action == "generate_and_execute_pytrend_code":
                observation = known_actions[action](keyword)
            else:
                # if the input is not on the Action line, reuse the previous observation
                observation = known_actions[action](action_input or observation)
            print("Observation:", observation)
            next_prompt = f"Observation: {observation}"
        else:
            # no action found: the agent has produced its final answer
            return result

# usage
keyword = "AI Agent"
question = "Give me some ideas for Medium posts related to the topic 'AI agent'."
query(question, keyword)
```

And here is the output. As you can see, the agent starts with a thought, which drives the actions it takes, and finally outputs the answer.

This setup allows the agent to generate Medium post ideas by going through a process with different actions. The only thing I provided to the agent is the set of actions it can take; I did not tell it in which order to execute them. It is the agent that decides to first get trend data from Google Trends, then analyze the data, and finally generate relevant content ideas.

You can also get better output by adding more critical actions to your workflow, for example an action that reviews, and can veto, what the agent produces.
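As one illustration of a critical action, here is a sketch of a hypothetical `review_post_ideas` tool that asks the LLM to approve or reject the generated ideas. The prompt wording, the `APPROVE`/`REJECT:` convention, and `fake_llm` are assumptions; `functools.partial` turns the tool into the one-argument form the other actions use, so it could be added to `known_actions` and listed in the prompt.

```python
from functools import partial

def review_post_ideas(ideas: str, llm) -> str:
    """Hypothetical critic action: returns 'APPROVE' or 'REJECT: <reason>' as decided by the LLM."""
    return llm(
        "You are a strict editor. Reply APPROVE if the following Medium post ideas are "
        "specific and original; otherwise reply REJECT: followed by a reason.\n\n" + ideas
    ).strip()

def is_approved(verdict: str) -> bool:
    return verdict.startswith("APPROVE")

# Usage with a stand-in LLM that rejects ideas of three words or fewer
def fake_llm(prompt: str) -> str:
    ideas = prompt.split("\n\n", 1)[1]
    return "APPROVE" if len(ideas.split()) > 3 else "REJECT: too vague"

# One-argument form, like the other actions in known_actions
review = partial(review_post_ideas, llm=fake_llm)
verdict = review("How AI agents plan multi-step tasks")
```

With a real model, the `llm` argument could wrap a call like the one in `analyze_trends_data`, and a `REJECT` verdict could be fed back as an observation so the agent tries again.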

Congratulations! You now have a good handle on how to build your own agent. The example in this post is quite simple, but you can build complex agents using the same principle. I am curious to hear about your agents; let me know in the comments!

Thanks for reading!

I love writing about data science concepts and playing with different AI models and data science tools. Feel free to connect with me here on Medium or on LinkedIn. If you are keen on reading more of my writing but can’t choose which one, no worries, I have picked some for you:

- Getting structured information out of images (levelup.gitconnected.com): _Using the latest and most advanced OpenAI’s model called GPT-4o_

- GenAI’s products: Move fast and fail (pub.towardsai.net): _Building a cool and fancy demo is easy, building a final product is not._

- Do You Understand Me? Human and Machine Intelligence (pub.towardsai.net): _Can we ever understand human intelligence and make computers intelligent in the same way?_

- LLM Output — Evaluating, debugging and interpreting (pub.towardsai.net): _LLMs are not useful if they are not sufficiently accurate._